Intro

Note: this guide is a work-in-progress.

What’s this guide about?

It’s hard to say exactly what this guide is about. It’s easiest to say that it is primarily about verifying multi-threaded concurrent code in Verus, and in fact, we developed most of this framework with that goal in mind, but these techniques are actually useful for single-threaded code, too. We might also say that it’s about verifying code that needs unsafe features (especially raw pointers and unsafe cells), though again, there are plenty of use-cases where this does not apply.

The unifying theme for the above are programs that require some kind of nontrivial ownership discipline, where different objects that might be “owned independently” need to coordinate somehow. For example:

- Locks need to manage ownership of some underlying resource between multiple clients.

- Reference-counted smart pointers need to coordinate to agree on a reference-count.

- Concurrent data structures (queues, hash tables, and so on) require their client threads to coordinate their access to the data structure.

This kind of nontrivial ownership can be implemented through Verus’s

tokenized_state_machine! utility, and this utility will be the main

tool we’ll learn how to use in this guide.

Who’s this guide for?

Read this if you’re interested in learning how to:

- Verify multi-threaded concurrent code in Verus.

- Verify code that requires “unsafe” code in unverified Rust (e.g., code with raw pointers or unsafe cells)

Or if you just want to know what any of these Verus features are for:

- Verus’s

state_machine!ortokenized_state_machine!macros - Verus’s

trackedvariable mode (“linear ghost state”).

This guide expects general familiarity with Verus, so readers unfamiliar with Verus

should check out the general Verus user guide

first and become proficient at coding within its spec, proof, and exec modes,

using ghost and exec variables.

Further Reading

For a fully comprehensive account, please see Verifying Concurrent Systems Code.

Tokenized State Machines

High-Level Concepts

The approach we follow for each of the examples follows roughly this high-level recipe:

- Consider the program you want to verify.

- Create an “abstraction” of the program as a tokenized state machine.

- Verus will automatically produce for you a bunch of ghost “token types” that make up the tokenized state machine.

- Implement a verified program using the token types

That doesn’t sound too bad, but there’s a bit of an art to it, especially in step (2). To build a proper abstraction, one needs to choose an abstraction which is both abstract enough that it’s easy to prove the relevant properties about, but still concrete enough that it can be properly connected back to the implementation in step (4). Choosing this abstraction requires one to identify which pieces of state need to be in the abstraction, as well as which “tokenization strategy” to use—that’s a concept we’ll be introducing soon.

In the upcoming examples, we’ll look at a variety of scenarios and the techniques we can use to tackle them.

Tutorial by example

In this section, we will walk through a series of increasingly complex examples to illustrate how to use Verus’s tokenized_state_machine! framework to verify concurrent programs.

Counting to 2

Suppose we want to verify a program like the following:

- The main thread instantiates a counter to 0.

- The main thread forks two child threads.

- Each child thread (atomically) increments the counter.

- The main thread joins the two threads (i.e., waits for them to complete).

- The main thread reads the counter.

Our objective: Prove the counter read in the final step has value 2.

// Ordinary Rust code, not Verus

use std::sync::atomic::{AtomicU32, Ordering};

use std::sync::Arc;

use std::thread::spawn;

fn main() {

// Initialize an atomic variable

let atomic = AtomicU32::new(0);

// Put it in an Arc so it can be shared by multiple threads.

let shared_atomic = Arc::new(atomic);

// Spawn a thread to increment the atomic once.

let handle1 = {

let shared_atomic = shared_atomic.clone();

spawn(move || {

shared_atomic.fetch_add(1, Ordering::SeqCst);

})

};

// Spawn another thread to increment the atomic once.

let handle2 = {

let shared_atomic = shared_atomic.clone();

spawn(move || {

shared_atomic.fetch_add(1, Ordering::SeqCst);

})

};

// Wait on both threads. Exit if an unexpected condition occurs.

match handle1.join() {

Result::Ok(()) => {}

_ => {

return;

}

};

match handle2.join() {

Result::Ok(()) => {}

_ => {

return;

}

};

// Load the value, and assert that it should now be 2.

let val = shared_atomic.load(Ordering::SeqCst);

assert!(val == 2);

}We’ll walk through the verification of this snippet, starting with the planning stage.

In general, the verification of a concurrent program will look something like the following:

- Devise an abstraction of the concurrent program. We want an abstraction that is both easy to reason about on its own, but which is “shardable” enough to capture the concurrent execution of the code.

- Formalize the abstraction as a

tokenized_state_machine!system. Use Verus to check that it is well-formed. - Implement the desired code, “annotating” it with ghost tokens provided by

tokenized_state_machine!.

Devising the abstraction

We’ll explain our abstraction using a (possibly overwrought) analogy.

Let’s imagine that the program is taking place in a classroom, with threads and other concepts personified as students.

To start, we’ll use the chalkboard to represent the counter, so we’ll start by writing a 0 on the chalkboard. Then we’ll ask the student council president to watch the chalkboard and make sure that everybody accessing the chalkboard follows the rules.

The rule is simple: the student can walk up to the chalkboard, and if they have a ticket, they can erase the value and increment it by 1. The student council president will stamp the ticket so the same ticket can’t be used again.

Now: suppose you create two tickets and give them to Alice and Bob. Then, you go take a nap, and when you come back, Alice and Bob both give you two stamped tickets. Now, you go look at the chalkboard. Is it possible to see anything than 2?

No, of course not. It must say 2, since both tickets got stamped, so the chalkboard counter must have incremented 2 times.

There are some implicit assumptions here, naturally. For example, we have to assume that nobody could have forged their own ticket, or their own stamp, or remove stamps…

On the other hand—subject to the players playing by the rules of the game as we laid out—our conclusion that the chalkboard holds the number 2 holds without even making any assumptions about what Alice and Bob did while we were away. For all we know, Alice handed the ticket off to Carol who gave it to Dave, who incremented the counter, who then gave it back to Alice. Maybe Alice and Bob switched tickets. Who knows? It’s all implementation details.

Formalizing the abstraction

It’s time to formalize the above intuition with a tokenized_state_machine!.

The machine is going to have two pieces of state: the counter: int (“the number on the chalkboard”) and the state representing the “tickets”. For the latter, since our example is fixed to the number 2, we’ll represent these as two separate fields, ticket_a: bool and ticket_b: bool, where false means an “unstamped ticket” (i.e., has not incremented the counter) and true represents a “stamped ticket” (i.e., has incremented the counter).

(In the next section we’ll see how to generalize to 2 to n, so we won’t need a separate field for each ticket, but we’ll keep things simple for now.)

Here’s our first (incomplete) attempt at a definition:

tokenized_state_machine!{

X {

fields {

#[sharding(variable)]

pub counter: int,

#[sharding(variable)]

pub ticket_a: bool,

#[sharding(variable)]

pub ticket_b: bool,

}

init!{

initialize() {

init counter = 0; // Initialize “chalkboard” to 0

init ticket_a = false; // Create one “unstamped ticket”

init ticket_b = false; // Create another “unstamped ticket”

}

}

transition!{

do_increment_a() {

require(!pre.ticket_a); // Require the client to provide an “unstamped ticket”

update counter = pre.counter + 1; // Increment the chalkboard counter by 1

update ticket_a = true; // Stamp the ticket

}

}

transition!{

do_increment_b() {

require(!pre.ticket_b); // Require the client to provide an “unstamped ticket”

update counter = pre.counter + 1; // Increment the chalkboard counter by 1

update ticket_b = true; // Stamp the ticket

}

}

readonly!{

finalize() {

require(pre.ticket_a); // Given that both tickets are stamped

require(pre.ticket_b); // ...

assert(pre.counter == 2); // one can conclude the chalkboard value is 2.

}

}

}

}Let’s take this definition one piece at a time. In the fields block, we declared our three states. Note that each one is tagged with a sharding strategy, which tells Verus how to break the state into pieces—we’ll talk about that below. We’ll talk more about the strategies in the next section; right now, all three of our fields use the variable strategy, so we don’t have anything to compare to.

Now we defined four operations on the state machine. The first one is an initialization procedure, named initialize: It lets instantiate the protocol with the counter at 0 and with two unstamped tickets.

The transition do_increment_a lets the client trade in an unstamped ticket for a stamped ticket, while incrementing the counter. The transition do_increment_b is similar, for the ticket_b ticket.

Lastly, we come to the finalize operation. This one is readonly!, as it doesn’t actually update any state. Instead, it lets the client conclude something about the state that we read: just by having the two stamped tickets, we can conclude that the counter value is 2.

Let’s run and see what happens.

error: unable to prove assertion safety condition

--> y.rs:50:17

|

50 | assert(pre.counter == 2);

| ^^^^^^^^^^^^^^^^^^^^^^^^^

error: aborting due to previous error; 1 warning emitted

Uh-oh. Verus wasn’t able to prove the safety condition. Of course not—we didn’t provide any invariant for our system! For all Verus knows, the state {counter: 1, ticket_a: true, ticket_b: true} is valid. Let’s fix this up:

#[invariant]

pub fn main_inv(&self) -> bool {

self.counter == (if self.inc_a { 1 as int } else { 0 }) + (if self.inc_b { 1 as int } else { 0 })

}Our invariant is pretty straightforward: The value of the counter should be equal to the number of stamps. Now, we need to supply stub lemmas to prove that the invariant is preserved by every transition. In this case, Verus completes the proofs easily, so we don’t need to supply any proofs in the lemma bodies to help out Verus.

#[inductive(tr_inc_a)]

fn tr_inc_a_preserves(pre: Self, post: Self) {

}

#[inductive(tr_inc_b)]

fn tr_inc_b_preserves(pre: Self, post: Self) {

}

#[inductive(initialize)]

fn initialize_inv(post: Self) {

}Now that we’ve completed our abstraction, let’s turn towards the implementation.

The Auto-generated Token API

Given a tokenized_state_machine! like the above, Verus will analyze it and produce a series of token types representing pieces of the state, and a series of exchange functions that perform the transitions on the tokens.

(TODO provide instructions for the user to get this information themselves)

Let’s take a look at the tokens that Verus generates here. First, Verus generates an Instance type for instances of the protocol. For the simple state machine here, this doesn’t do very much other than serve as a unique identifier for each instantiation.

#[proof]

#[verifier(unforgeable)]

pub struct Instance { ... }Next, we have token types that represent the actual fields of the state machine. We get one token for each field:

#[proof]

#[verifier(unforgeable)]

pub struct counter {

#[spec] pub instance: X::Instance,

#[spec] pub value: int,

}

#[proof]

#[verifier(unforgeable)]

pub struct ticket_a {

#[spec] pub instance: X::Instance,

#[spec] pub value: bool,

}

#[proof]

#[verifier(unforgeable)]

pub struct ticket_b {

#[spec] pub instance: X::Instance,

#[spec] pub value: bool,

}For example, ownership of a token X::counter { instance: inst, value: 5 } represents proof that the instance inst of the protocol currently has its counter state set to 5. With all three tokens for a given instance taken altogether, we recover the full state.

Now, let’s take a look at the exchange functions. We start with X::Instance::initialize, generated from our declared initialize operation. This function returns a fresh instance of the protocol (X::Instance) and tokens for each field (X::counter, X::ticket_a, and X::ticket_b) all initialized to the values as we declared them (0, false, and false).

impl Instance {

// init!{

// initialize() {

// init counter = 0;

// init ticket_a = false;

// init ticket_b = false;

// }

// }

#[proof]

#[verifier(returns(proof))]

pub fn initialize() -> (X::Instance, X::counter, X::ticket_a, X::ticket_b) {

ensures(|tmp_tuple: (X::Instance, X::counter, X::ticket_a, X::ticket_b)| {

[{

let (instance, token_counter, token_ticket_a, token_ticket_b) = tmp_tuple;

(equal(token_counter.instance, instance))

&& (equal(token_ticket_a.instance, instance))

&& (equal(token_ticket_b.instance, instance))

&& (equal(token_counter.value, 0)) // init counter = 0;

&& (equal(token_ticket_a.value, false)) // init ticket_a = false;

&& (equal(token_ticket_b.value, false)) // init ticket_b = false;

}]

});

...

}Next, the function for the do_increment_a transition. Note that enabling condition in the user’s declared transition

becomes a precondition for calling of do_increment_a. The exchange function takes a X::counter and X::ticket_a token as input,

and since the transition modifies both fields, the exchange function takes the tokens as &mut.

Also note, crucially, that it does not take a X::ticket_b token at all because the transition doesn’t depend on the ticket_b field.

The transition can be performed entirely without reference to it.

// transition!{

// do_increment_a() {

// require(!pre.ticket_a);

// update counter = pre.counter + 1;

// update ticket_a = true;

// }

// }

#[proof]

pub fn do_increment_a(

#[proof] &self,

#[proof] token_counter: &mut X::counter,

#[proof] token_ticket_a: &mut X::ticket_a,

) {

requires([

equal(old(token_counter).instance, (*self)),

equal(old(token_ticket_a).instance, (*self)),

(!old(token_ticket_a).value), // require(!pre.ticket_a)

]);

ensures([

equal(token_counter.instance, (*self)),

equal(token_ticket_a.instance, (*self)),

equal(token_counter.value, old(token_counter).value + 1), // update counter = pre.counter + 1

equal(token_ticket_a.value, true), // update ticket_a = true

]);

...

}The function for the do_increment_b transition is similar:

// transition!{

// do_increment_b() {

// require(!pre.ticket_b);

// update counter = pre.counter + 1;

// update ticket_b = true;

// }

// }

#[proof]

pub fn do_increment_b(

#[proof] &self,

#[proof] token_counter: &mut X::counter,

#[proof] token_ticket_b: &mut X::ticket_b,

) {

requires([

equal(old(token_counter).instance, (*self)),

equal(old(token_ticket_b).instance, (*self)),

(!old(token_ticket_b).value), // require(!pre.ticket_b)

]);

ensures([

equal(token_counter.instance, (*self)),

equal(token_ticket_b.instance, (*self)),

equal(token_counter.value, old(token_counter).value + 1), // update counter = pre.counter + 1

equal(token_ticket_b.value, true), // update ticket_b = true

]);

...

}Finally, we come to the finalize operation. Again this is a “no-op” transition that doesn’t update any fields, so the generated exchange method takes the tokens as readonly parameters (non-mutable borrows). Here, we observe that the assert becomes a post-condition, that is, by performing this operation, though it does not update any state, causes us to learn something about that state.

// readonly!{

// finalize() {

// require(pre.ticket_a);

// require(pre.ticket_b);

// assert(pre.counter == 2);

// }

// }

#[proof]

pub fn finalize(

#[proof] &self,

#[proof] token_counter: &X::counter,

#[proof] token_ticket_a: &X::ticket_a,

#[proof] token_ticket_b: &X::,

) {

requires([

equal(token_counter.instance, (*self)),

equal(token_ticket_a.instance, (*self)),

equal(token_ticket_b.instance, (*self)),

(token_ticket_a.value), // require(pre.ticket_a)

(token_ticket_b.value), // require(pre.ticket_b)

]);

ensures([token_counter.value == 2]); // assert(pre.counter == 2)

...

}

}Writing the verified implementation

To verify the implementation, our plan is to instantiate this ghost protocol and associate

the counter field of the protocol to the atomic memory location we use in our code.

To do this, we’ll use the Verus library atomic_ghost.

Specifically, we’ll use the type atomic_ghost::AtomicU32<X::counter>.

This is a wrapper around an AtomicU32 location which associates it to a tracked

ghost token X::counter.

More specifically, all threads will share this global state:

struct_with_invariants!{

pub struct Global {

// An AtomicU32 that matches with the `counter` field of the ghost protocol.

pub atomic: AtomicU32<_, X::counter, _>,

// The instance of the protocol that the `counter` is part of.

pub instance: Tracked<X::Instance>,

}

spec fn wf(&self) -> bool {

// Specify the invariant that should hold on the AtomicU32<X::counter>.

// Specifically the ghost token (`g`) should have

// the same value as the atomic (`v`).

// Furthermore, the ghost token should have the appropriate `instance`.

invariant on atomic with (instance) is (v: u32, g: X::counter) {

g.instance_id() == instance@.id()

&& g.value() == v as int

}

}

}Note that we track instance as a separate field. This ensures that all threads agree

on which instance of the protocol they are running.

(Keep in mind that whenever we perform a transition on the ghost tokens, all the tokens have to have the same instance. Why does Verus enforce restriction? Because if it did not, then the programmer could instantiate two instances of the protocol, the mix-and-match to get into an invalid state. For example, they could increment the counter twice, using up both “tickets” of that instance, and then use the ticket of another instance to increment it a third time.)

TODO finish writing the explanation

Counting to 2, unverified Rust source

// Ordinary Rust code, not Verus

use std::sync::atomic::{AtomicU32, Ordering};

use std::sync::Arc;

use std::thread::spawn;

fn main() {

// Initialize an atomic variable

let atomic = AtomicU32::new(0);

// Put it in an Arc so it can be shared by multiple threads.

let shared_atomic = Arc::new(atomic);

// Spawn a thread to increment the atomic once.

let handle1 = {

let shared_atomic = shared_atomic.clone();

spawn(move || {

shared_atomic.fetch_add(1, Ordering::SeqCst);

})

};

// Spawn another thread to increment the atomic once.

let handle2 = {

let shared_atomic = shared_atomic.clone();

spawn(move || {

shared_atomic.fetch_add(1, Ordering::SeqCst);

})

};

// Wait on both threads. Exit if an unexpected condition occurs.

match handle1.join() {

Result::Ok(()) => {}

_ => {

return;

}

};

match handle2.join() {

Result::Ok(()) => {}

_ => {

return;

}

};

// Load the value, and assert that it should now be 2.

let val = shared_atomic.load(Ordering::SeqCst);

assert!(val == 2);

}Counting to 2, verified source

use verus_builtin::*;

use verus_builtin_macros::*;

use verus_state_machines_macros::tokenized_state_machine;

use std::sync::Arc;

use vstd::atomic_ghost::*;

use vstd::modes::*;

use vstd::prelude::*;

use vstd::thread::*;

use vstd::{pervasive::*, *};

verus! {

tokenized_state_machine!(

X {

fields {

#[sharding(variable)]

pub counter: int,

#[sharding(variable)]

pub inc_a: bool,

#[sharding(variable)]

pub inc_b: bool,

}

#[invariant]

pub fn main_inv(&self) -> bool {

self.counter == (if self.inc_a { 1 as int } else { 0 }) + (if self.inc_b { 1 as int } else { 0 })

}

init!{

initialize() {

init counter = 0;

init inc_a = false;

init inc_b = false;

}

}

transition!{

tr_inc_a() {

require(!pre.inc_a);

update counter = pre.counter + 1;

update inc_a = true;

}

}

transition!{

tr_inc_b() {

require(!pre.inc_b);

update counter = pre.counter + 1;

update inc_b = true;

}

}

property!{

increment_will_not_overflow_u32() {

assert 0 <= pre.counter < 0xffff_ffff;

}

}

property!{

finalize() {

require(pre.inc_a);

require(pre.inc_b);

assert pre.counter == 2;

}

}

#[inductive(tr_inc_a)]

fn tr_inc_a_preserves(pre: Self, post: Self) {

}

#[inductive(tr_inc_b)]

fn tr_inc_b_preserves(pre: Self, post: Self) {

}

#[inductive(initialize)]

fn initialize_inv(post: Self) {

}

}

);

struct_with_invariants!{

pub struct Global {

// An AtomicU32 that matches with the `counter` field of the ghost protocol.

pub atomic: AtomicU32<_, X::counter, _>,

// The instance of the protocol that the `counter` is part of.

pub instance: Tracked<X::Instance>,

}

spec fn wf(&self) -> bool {

// Specify the invariant that should hold on the AtomicU32<X::counter>.

// Specifically the ghost token (`g`) should have

// the same value as the atomic (`v`).

// Furthermore, the ghost token should have the appropriate `instance`.

invariant on atomic with (instance) is (v: u32, g: X::counter) {

g.instance_id() == instance@.id()

&& g.value() == v as int

}

}

}

fn main() {

// Initialize protocol

let tracked (

Tracked(instance),

Tracked(counter_token),

Tracked(inc_a_token),

Tracked(inc_b_token),

) = X::Instance::initialize();

// Initialize the counter

let tr_instance: Tracked<X::Instance> = Tracked(instance.clone());

let atomic = AtomicU32::new(Ghost(tr_instance), 0, Tracked(counter_token));

let global = Global { atomic, instance: Tracked(instance.clone()) };

let global_arc = Arc::new(global);

// Spawn threads

// Thread 1

let global_arc1 = global_arc.clone();

let join_handle1 = spawn(

(move || -> (new_token: Tracked<X::inc_a>)

ensures

new_token@.instance_id() == instance.id() && new_token@.value() == true,

{

// `inc_a_token` is moved into the closure

let tracked mut token = inc_a_token;

let globals = &*global_arc1;

let _ =

atomic_with_ghost!(&globals.atomic => fetch_add(1);

ghost c => {

globals.instance.borrow().increment_will_not_overflow_u32(&c);

globals.instance.borrow().tr_inc_a(&mut c, &mut token); // atomic increment

}

);

Tracked(token)

}),

);

// Thread 2

let global_arc2 = global_arc.clone();

let join_handle2 = spawn(

(move || -> (new_token: Tracked<X::inc_b>)

ensures

new_token@.instance_id() == instance.id() && new_token@.value() == true,

{

// `inc_b_token` is moved into the closure

let tracked mut token = inc_b_token;

let globals = &*global_arc2;

let _ =

atomic_with_ghost!(&globals.atomic => fetch_add(1);

ghost c => {

globals.instance.borrow().increment_will_not_overflow_u32(&mut c);

globals.instance.borrow().tr_inc_b(&mut c, &mut token); // atomic increment

}

);

Tracked(token)

}),

);

// Join threads

let tracked inc_a_token;

match join_handle1.join() {

Result::Ok(token) => {

proof {

inc_a_token = token.get();

}

},

_ => {

return ;

},

};

let tracked inc_b_token;

match join_handle2.join() {

Result::Ok(token) => {

proof {

inc_b_token = token.get();

}

},

_ => {

return ;

},

};

// Join threads, load the atomic again

let global = &*global_arc;

let x =

atomic_with_ghost!(&global.atomic => load();

ghost c => {

instance.finalize(&c, &inc_a_token, &inc_b_token);

}

);

assert(x == 2);

}

} // verus!Counting to n

Let’s now generalize the previous exercise from using a fixed number of threads (2) to using an an arbitrary number of threads. Specifically, we’ll verify the equivalent of the following Rust program:

- The main thread instantiates a counter to 0.

- The main thread forks

num_threadschild threads.- Each child thread (atomically) increments the counter.

- The main thread joins all the threads (i.e., waits for them to complete).

- The main thread reads the counter.

Our objective: Prove the counter read in the final step has value num_threads.

// Ordinary Rust code, not Verus

use std::sync::atomic::{AtomicU32, Ordering};

use std::sync::Arc;

use std::thread::spawn;

fn do_count(num_threads: u32) {

// Initialize an atomic variable

let atomic = AtomicU32::new(0);

// Put it in an Arc so it can be shared by multiple threads.

let shared_atomic = Arc::new(atomic);

// Spawn `num_threads` threads to increment the atomic once.

let mut handles = Vec::new();

for _i in 0..num_threads {

let handle = {

let shared_atomic = shared_atomic.clone();

spawn(move || {

shared_atomic.fetch_add(1, Ordering::SeqCst);

})

};

handles.push(handle);

}

// Wait on all threads. Exit if an unexpected condition occurs.

for handle in handles.into_iter() {

match handle.join() {

Result::Ok(()) => {}

_ => {

return;

}

};

}

// Load the value, and assert that it should now be `num_threads`.

let val = shared_atomic.load(Ordering::SeqCst);

assert!(val == num_threads);

}

fn main() {

do_count(20);

}Verified implementation

We’ll build off the previous exercise here, so make sure you’re familiar with that one first.

Our first step towards verifying the generalized program

is to update the tokenized_state_machine from the earlier example.

Recall that in that example, we had two boolean fields, inc_a and inc_b,

to represent the two tickets.

This time, we will merely maintain counts of the number of tickets:

we’ll have one field for the number of unstamped tickets and one for the number

of stamped tickets.

Let’s start with the updated state machine, but ignore the tokenization aspect for now. Here’s the updated state machine as an atomic state machine:

state_machine! {

X {

fields {

pub num_threads: nat,

pub counter: int,

pub unstamped_tickets: nat,

pub stamped_tickets: nat,

}

#[invariant]

pub fn main_inv(&self) -> bool {

self.counter == self.stamped_tickets

&& self.stamped_tickets + self.unstamped_tickets == self.num_threads

}

init!{

initialize(num_threads: nat) {

init num_threads = num_threads;

init counter = 0;

init unstamped_tickets = num_threads;

init stamped_tickets = 0;

}

}

transition!{

tr_inc() {

// Replace a single unstamped ticket with a stamped ticket

require(pre.unstamped_tickets >= 1);

update unstamped_tickets = (pre.unstamped_tickets - 1) as nat;

update stamped_tickets = pre.stamped_tickets + 1;

assert(pre.counter < pre.num_threads);

update counter = pre.counter + 1;

}

}

property!{

finalize() {

require(pre.stamped_tickets >= pre.num_threads);

assert(pre.counter == pre.num_threads);

}

}

// ... invariant proofs here

}

}Note that we added a new field, num_threads, and we replaced inc_a and inc_b with unstamped_tickets and stampted_tickets.

Now, let’s talk about the tokenization. In the previous example, all of our fields used the variable strategy, but we never got a chance to talk about what that meant. Let’s now see some of the other strategies.

For our new program, we will need to make two changes.

The constant strategy

First, the num_threads can be marked as a constant, since this value is really just

a parameter to the protocol, and will be fixed for any given instance of it.

By marking it constant, we won’t get a token for it, but instead the value will be available

from the shared Instance object.

The count strategy

This change is far more subtle. The key problem we need to solve is that the “tickets” need

to be spread across multiple threads. However, if unstamped_tickets and stamped_tickets

were marked as variable then we would only get one token for each field.

Recall our physical analogy with the tickets and the chalkboard (used for the counter field),

and compare: there’s actually something fundamentally different about the tickets

and the chalkboard, which is that the tickets are actually a count of something.

Think of it this way:

- If Alice has 3 tickets, and Bob has 2 tickets, then together they have 5 tickets.

- If Alice has a chalkboard with the number 3 written on it, and Bob has a chalkboard with the number 2 on it, then together do they have a chalkboard with the number 5 written on it?

- No! They just have two chalkboards with 2 and 3 written on them. In fact, in our scenario, we aren’t even supposed to have more than 1 chalkboard anyway. Alice and Bob are in an invalid state here.

We need a way to mark this distinction, that is, we need a way to be able to say,

“this field is a value on a chalkboard” versus “the field indicates the number of some thing”.

The variable strategy we have been using until now is the former;

the newly introduce count strategy is the latter.

Thus, we need to use the count strategy for the ticket fields.

We need to mark the ticket fields as being a “count” of something, and this is exactly

what the count strategy is for. Rather than having exactly one token for the

field value, the count strategy will make it so that the field value is

the sum total of all the tokens in the system

associated with that field. (However, this new flexibility will come

with some restrictions, as we will see.)

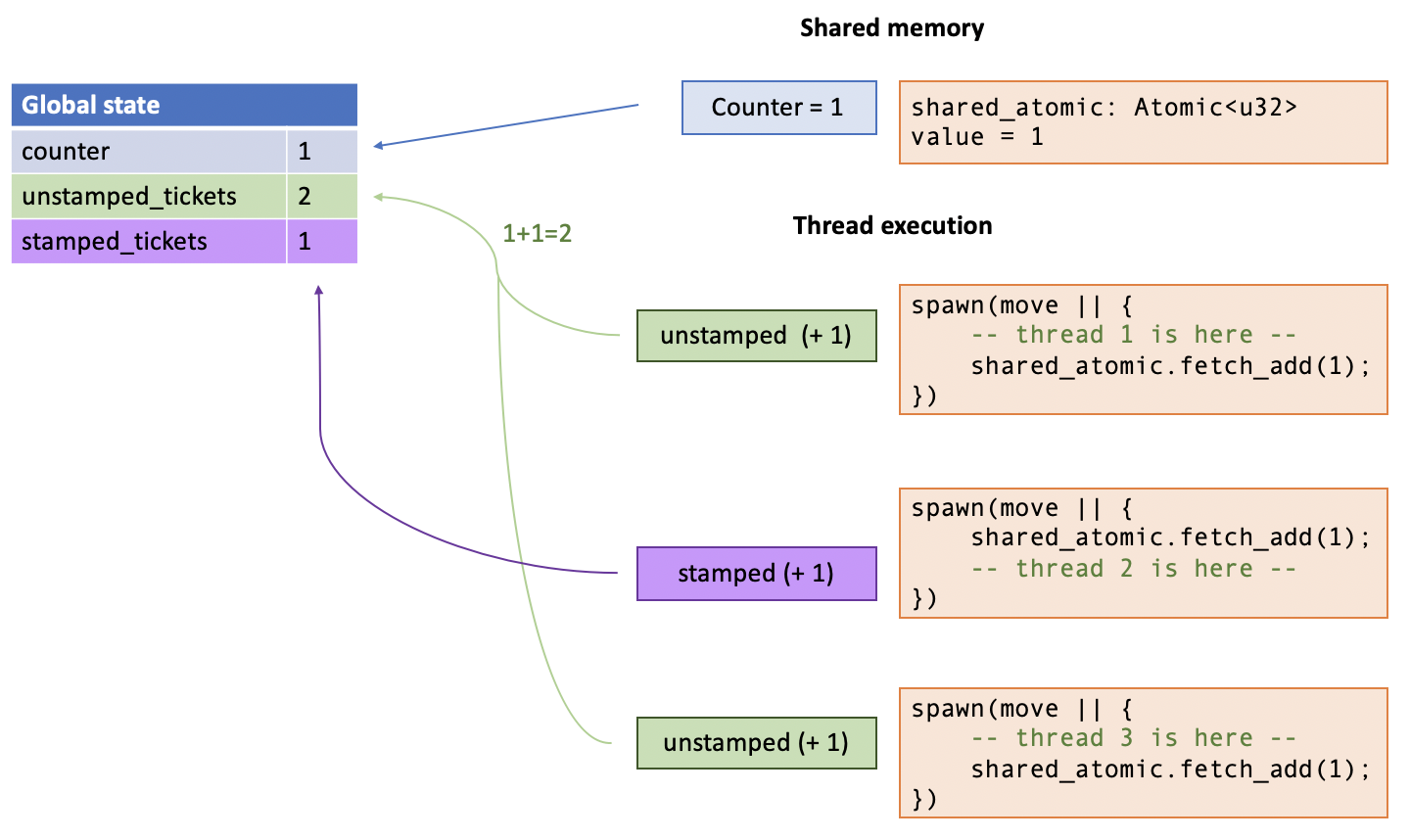

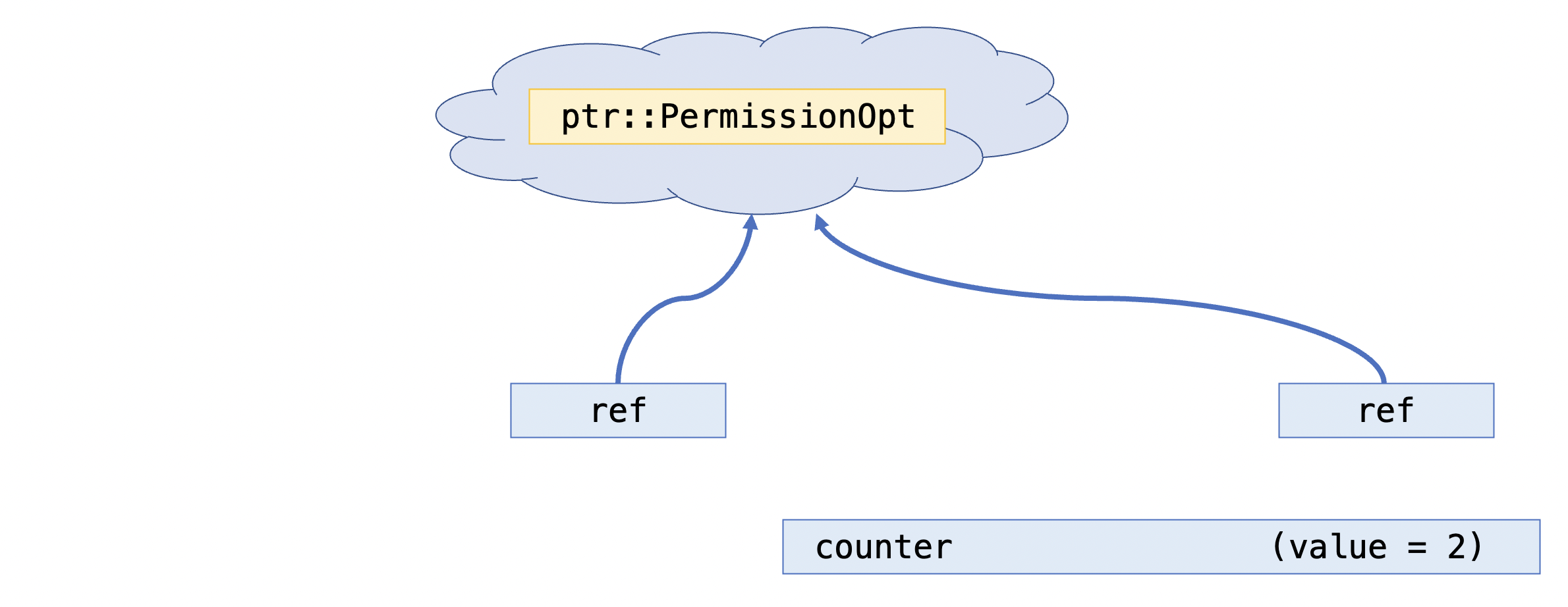

Here, we can visualize how the ghost tokens are spread throughout the running system, and how they relate to the global state:

In this scenario, we have three threads, all of which are currenlty executing,

where thread 2 has incremented the counter, but threads 1 and 3 have not.

Thus, threads 1 and 3 each have an “unstamped ticket” token,

while thread 2 has a “stamped ticket” token.

The abstract global state, then, has

unstamped_tickets == 2 and stamped_tickets == 1.

Building the new tokenized_state_machine

First, we mark the fields with the appropriate strategies, as we discussed:

tokenized_state_machine!{

X {

fields {

#[sharding(constant)]

pub num_threads: nat,

#[sharding(variable)]

pub counter: int,

#[sharding(count)]

pub unstamped_tickets: nat,

#[sharding(count)]

pub stamped_tickets: nat,

}Just by marking the strategies, we can already see how it impacts the generated token code.

Let’s look at unstamped_tickets (the stamped_tickets is, of course, similar):

// Code auto-generated by tokenized_state_machine! macro

#[proof]

#[verifier(unforgeable)]

pub struct unstamped_tickets {

#[spec] pub instance: Instance,

#[spec] pub value: nat,

}

impl unstamped_tickets {

#[proof]

#[verifier(returns(proof))]

pub fn join(#[proof] self, #[proof] other: Self) -> Self {

requires(equal(self.instance, other.instance));

ensures(|s: Self| {

equal(s.instance, self.instance)

&& equal(s.value, self.value + other.value)

});

// ...

}

#[proof]

#[verifier(returns(proof))]

pub fn split(#[proof] self, #[spec] i: nat) -> (Self, Self) {

requires(i <= self.value);

ensures(|s: (Self, Self)| {

equal(s.0.instance, self.instance)

&& equal(s.1.instance, self.instance)

&& equal(s.0.value, i)

&& equal(s.1.value, self.value - i)

});

// ...

}

}The token type, unstamped_tickets comes free with 2 associated methods,

join and split. First, join lets us take two tokens with an associate count and

merge them together to get a single token with the combined count value;

meanwhile, split goes the other way.

So for example, when we start out with a single token of count num_threads, we can

split into num_threads tokens, each with count 1.

Now, let’s move on to the rest of the system.

Our invariant and the initialization routine will be identical to before.

(In general, init statements are used for all sharding strategies.

The sharding strategies might affect the token method that gets generated, but the

init! definition itself will remain the same.)

#[invariant]

pub fn main_inv(&self) -> bool {

self.counter == self.stamped_tickets

&& self.stamped_tickets + self.unstamped_tickets == self.num_threads

}

init!{

initialize(num_threads: nat) {

init num_threads = num_threads;

init counter = 0;

init unstamped_tickets = num_threads;

init stamped_tickets = 0;

}

}The tr_inc definition is where it gets interesting. Let’s take a closer look at the definition

we gave earlier:

transition!{

tr_inc() {

// Replace a single unstamped ticket with a stamped ticket

require(pre.unstamped_tickets >= 1);

update unstamped_tickets = pre.unstamped_tickets - 1;

update stamped_tickets = pre.stamped_tickets + 1;

assert(pre.counter < pre.num_threads);

update counter = pre.counter + 1;

}

} There’s a problem here, which is that the operation directly accesses pre.unstamped_tickets

and writes to the same field with an update statement, and likewise for the

stamped_tickets field. Because these fields are marked with the count strategy,

Verus will reject this definition.

So why does Verus have to reject it? Keep in mind that whatever definition we give here, Verus has to be able to create a transition definition that works in the tokenized view of the world. In any tokenized transition, the tokens that serve as input must by themselves be able to justify the validity of the transition being performed.

Unfortunately, this justification is impossible when we are using the count strategy.

When the field is tokenized according to the count strategy,

there is no way for a client to produce a set of

tokens that definitively determines the value of the unstamped_tickets field in the global state machine.

For instance, suppose the client provides three such tokens; this does not necessarily means

that pre.unstamped_tickets is equal to 3! Rather, there might be other tokens

elsewhere in the system held on by other threads, so all we can justify from the existence

of those tokens is that pre.unstamped_tickets is greater than or equal to 3.

Thus, Verus demands that we do not read or write to the field arbitrarily.

Effectively, we can only perform operations that look like one of the following for a count-strategy field:

- Require the field’s value to be greater than some natural number

- Subtract a natural number

- Add a natural number

Luckily, we can see that the transition from earlier only does these allowed things. To get Verus to accept it, we only need to write the transition using a special syntax so that it can identify the patterns involved.

transition!{

tr_inc() {

// Equivalent to:

// require(pre.unstamped_tickets >= 1);

// update unstampted_tickets = pre.unstamped_tickets - 1

// (In any `remove` statement, the `>=` condition is always implicit.)

remove unstamped_tickets -= (1);

// Equivalent to:

// update stamped_tickets = pre.stamped_tickets + 1

add stamped_tickets += (1);

// These still use ordinary 'update' syntax, because `pre.counter`

// uses the `variable` sharding strategy.

assert(pre.counter < pre.num_threads);

update counter = pre.counter + 1;

}

}Generally, a remove corresponds to destroying a token, while add corresponds to creating a token. Thus the generated exchange function takes an unstamped_tickets token as input

and gives a stamped_tickets token as output.

Ultimately, thus, it exchanges an unstamped ticket for a stamped ticket.

// Code auto-generated by tokenized_state_machine! macro

#[proof]

#[verifier(returns(proof))]

pub fn tr_inc(

#[proof] &self,

#[proof] token_counter: &mut counter,

#[proof] token_0_unstamped_tickets: unstamped_tickets, // input (destroyed)

) -> stamped_tickets // output (created)

{

requires([

equal(old(token_counter).instance, (*self)),

equal(token_0_unstamped_tickets.instance, (*self)),

equal(token_0_unstamped_tickets.value, 1), // remove unstamped_tickets -= (1);

]);

ensures(|token_1_stamped_tickets: stamped_tickets| {

[

equal(token_counter.instance, (*self)),

equal(token_1_stamped_tickets.instance, (*self)),

equal(token_1_stamped_tickets.value, 1), // add stamped_tickets += (1);

(old(token_counter).value < (*self).num_threads()), // assert(pre.counter < pre.num_threads);

equal(token_counter.value, old(token_counter).value + 1), // update counter = pre.counter + 1;

]

});

// ...

}The finalize transition needs to be updated in a similar way:

property!{

finalize() {

// Equivalent to:

// require(pre.unstamped_tickets >= pre.num_threads);

have stamped_tickets >= (pre.num_threads);

assert(pre.counter == pre.num_threads);

}

}The have statement is similar to remove, except that it doesn’t do the remove.

It just requires the client to provide tokens counting in total at least pre.num_threads here,

but it doesn’t consume them.

Again, notice that the condition we have to write is equivalent to a >= condition.

We can’t just require that stamped_tickets == pre.num_threads.

(Incidentally, it does happen to be the case that pre.stamped_tickets >= pre.num_threads

implies that pre.stamped_tickets == pre.num_threads. This implication follows

from the the invariant, but it isn’t something we know a priori.

Therefore, it is still the case that we have to write the transition with the weaker requirement

that pre.stamped_tickets >= pre.num_threads. The safety proof will then deduce that

pre.stamped_tickets == pre.num_threads from the invariant, and then deduce that

pre.counter == pre.num_threads, which is what we really want in the end.)

// Code auto-generated by tokenized_state_machine! macro

#[proof]

pub fn finalize(

#[proof] &self,

#[proof] token_counter: &counter,

#[proof] token_0_stamped_tickets: &stamped_tickets,

) {

requires([

equal(token_counter.instance, (*self)),

equal(token_0_stamped_tickets.instance, (*self)),

equal(token_0_stamped_tickets.value, (*self).num_threads()), // have stamped_tickets >= (pre.num_threads);

]);

ensures([(token_counter.value == (*self).num_threads())]); // assert(pre.counter == pre.num_threads);

// ...

}Verified Implementation

The implementation of a thread’s action hasn’t change much from before. The only difference

is that we are now

exchanging an unstamped_ticket for a stamped_ticket, rather than updating a

boolean field.

Perhaps more interesting now is the main function which does the spawning and joining.

It has to spawn threads in a loop. Note that we start with a stamped_tokens count of

num_threads. Each iteration of the loop, we “peel off” a single ticket (1 unit’s worth)

and pass it into the newly spawned thread.

// Spawn threads

let mut join_handles: Vec<JoinHandle<Tracked<X::stamped_tickets>>> = Vec::new();

let mut i = 0;

while i < num_threads

invariant

0 <= i,

i <= num_threads,

unstamped_tokens.count() + i == num_threads,

unstamped_tokens.instance_id() == instance.id(),

join_handles@.len() == i as int,

forall|j: int, ret|

0 <= j && j < i ==> join_handles@.index(j).predicate(ret) ==>

ret@.instance_id() == instance.id()

&& ret@.count() == 1,

(*global_arc).wf(),

(*global_arc).instance@ === instance,

{

let tracked unstamped_token;

proof {

unstamped_token = unstamped_tokens.split(1 as nat);

}

let global_arc = global_arc.clone();

let join_handle = spawn(

(move || -> (new_token: Tracked<X::stamped_tickets>)

ensures

new_token@.instance_id() == instance.id(),

new_token@.count() == 1,

{

let tracked unstamped_token = unstamped_token;

let globals = &*global_arc;

let tracked stamped_token;

let _ =

atomic_with_ghost!(

&global_arc.atomic => fetch_add(1);

update prev -> next;

returning ret;

ghost c => {

stamped_token =

global_arc.instance.borrow().tr_inc(&mut c, unstamped_token);

}

);

Tracked(stamped_token)

}),

);

join_handles.push(join_handle);

i = i + 1;

}Then, when we join the threads, we do the opposite: we collect the “stamped ticket” tokens

until we have collected all num_threads of them.

// Join threads

let mut i = 0;

while i < num_threads

invariant

0 <= i,

i <= num_threads,

stamped_tokens.count() == i,

stamped_tokens.instance_id() == instance.id(),

join_handles@.len() as int + i as int == num_threads,

forall|j: int, ret|

0 <= j && j < join_handles@.len() ==>

#[trigger] join_handles@.index(j).predicate(ret) ==>

ret@.instance_id() == instance.id()

&& ret@.count() == 1,

(*global_arc).wf(),

(*global_arc).instance@ === instance,

{

let join_handle = join_handles.pop().unwrap();

match join_handle.join() {

Result::Ok(token) => {

proof {

stamped_tokens.join(token.get());

}

},

_ => {

return ;

},

};

i = i + 1;

}See the full verified source for more detail.

Counting to n, unverified Rust source

// Ordinary Rust code, not Verus

use std::sync::atomic::{AtomicU32, Ordering};

use std::sync::Arc;

use std::thread::spawn;

fn do_count(num_threads: u32) {

// Initialize an atomic variable

let atomic = AtomicU32::new(0);

// Put it in an Arc so it can be shared by multiple threads.

let shared_atomic = Arc::new(atomic);

// Spawn `num_threads` threads to increment the atomic once.

let mut handles = Vec::new();

for _i in 0..num_threads {

let handle = {

let shared_atomic = shared_atomic.clone();

spawn(move || {

shared_atomic.fetch_add(1, Ordering::SeqCst);

})

};

handles.push(handle);

}

// Wait on all threads. Exit if an unexpected condition occurs.

for handle in handles.into_iter() {

match handle.join() {

Result::Ok(()) => {}

_ => {

return;

}

};

}

// Load the value, and assert that it should now be `num_threads`.

let val = shared_atomic.load(Ordering::SeqCst);

assert!(val == num_threads);

}

fn main() {

do_count(20);

}Counting to n, verified source

use verus_state_machines_macros::tokenized_state_machine;

use std::sync::Arc;

use vstd::atomic_ghost::*;

use vstd::modes::*;

use vstd::prelude::*;

use vstd::thread::*;

use vstd::{pervasive::*, prelude::*, *};

verus! {

tokenized_state_machine!{

X {

fields {

#[sharding(constant)]

pub num_threads: nat,

#[sharding(variable)]

pub counter: int,

#[sharding(count)]

pub unstamped_tickets: nat,

#[sharding(count)]

pub stamped_tickets: nat,

}

#[invariant]

pub fn main_inv(&self) -> bool {

self.counter == self.stamped_tickets

&& self.stamped_tickets + self.unstamped_tickets == self.num_threads

}

init!{

initialize(num_threads: nat) {

init num_threads = num_threads;

init counter = 0;

init unstamped_tickets = num_threads;

init stamped_tickets = 0;

}

}

transition!{

tr_inc() {

// Equivalent to:

// require(pre.unstamped_tickets >= 1);

// update unstampted_tickets = pre.unstamped_tickets - 1

// (In any `remove` statement, the `>=` condition is always implicit.)

remove unstamped_tickets -= (1);

// Equivalent to:

// update stamped_tickets = pre.stamped_tickets + 1

add stamped_tickets += (1);

// These still use ordinary 'update' syntax, because `pre.counter`

// uses the `variable` sharding strategy.

assert(pre.counter < pre.num_threads);

update counter = pre.counter + 1;

}

}

property!{

finalize() {

// Equivalent to:

// require(pre.unstamped_tickets >= pre.num_threads);

have stamped_tickets >= (pre.num_threads);

assert(pre.counter == pre.num_threads);

}

}

#[inductive(initialize)]

fn initialize_inductive(post: Self, num_threads: nat) { }

#[inductive(tr_inc)]

fn tr_inc_preserves(pre: Self, post: Self) {

}

}

}

struct_with_invariants!{

pub struct Global {

pub atomic: AtomicU32<_, X::counter, _>,

pub instance: Tracked<X::Instance>,

}

spec fn wf(&self) -> bool {

invariant on atomic with (instance) is (v: u32, g: X::counter) {

g.instance_id() == instance@.id()

&& g.value() == v as int

}

predicate {

self.instance@.num_threads() < 0x100000000

}

}

}

fn do_count(num_threads: u32) {

// Initialize protocol

let tracked (

Tracked(instance),

Tracked(counter_token),

Tracked(unstamped_tokens),

Tracked(stamped_tokens),

) = X::Instance::initialize(num_threads as nat);

// Initialize the counter

let tracked_instance = Tracked(instance.clone());

let atomic = AtomicU32::new(Ghost(tracked_instance), 0, Tracked(counter_token));

let global = Global { atomic, instance: tracked_instance };

let global_arc = Arc::new(global);

// Spawn threads

let mut join_handles: Vec<JoinHandle<Tracked<X::stamped_tickets>>> = Vec::new();

let mut i = 0;

while i < num_threads

invariant

0 <= i,

i <= num_threads,

unstamped_tokens.count() + i == num_threads,

unstamped_tokens.instance_id() == instance.id(),

join_handles@.len() == i as int,

forall|j: int, ret|

0 <= j && j < i ==> join_handles@.index(j).predicate(ret) ==>

ret@.instance_id() == instance.id()

&& ret@.count() == 1,

(*global_arc).wf(),

(*global_arc).instance@ === instance,

{

let tracked unstamped_token;

proof {

unstamped_token = unstamped_tokens.split(1 as nat);

}

let global_arc = global_arc.clone();

let join_handle = spawn(

(move || -> (new_token: Tracked<X::stamped_tickets>)

ensures

new_token@.instance_id() == instance.id(),

new_token@.count() == 1,

{

let tracked unstamped_token = unstamped_token;

let globals = &*global_arc;

let tracked stamped_token;

let _ =

atomic_with_ghost!(

&global_arc.atomic => fetch_add(1);

update prev -> next;

returning ret;

ghost c => {

stamped_token =

global_arc.instance.borrow().tr_inc(&mut c, unstamped_token);

}

);

Tracked(stamped_token)

}),

);

join_handles.push(join_handle);

i = i + 1;

}

// Join threads

let mut i = 0;

while i < num_threads

invariant

0 <= i,

i <= num_threads,

stamped_tokens.count() == i,

stamped_tokens.instance_id() == instance.id(),

join_handles@.len() as int + i as int == num_threads,

forall|j: int, ret|

0 <= j && j < join_handles@.len() ==>

#[trigger] join_handles@.index(j).predicate(ret) ==>

ret@.instance_id() == instance.id()

&& ret@.count() == 1,

(*global_arc).wf(),

(*global_arc).instance@ === instance,

{

let join_handle = join_handles.pop().unwrap();

match join_handle.join() {

Result::Ok(token) => {

proof {

stamped_tokens.join(token.get());

}

},

_ => {

return ;

},

};

i = i + 1;

}

let global = &*global_arc;

let x =

atomic_with_ghost!(&global.atomic => load();

ghost c => {

instance.finalize(&c, &stamped_tokens);

}

);

assert(x == num_threads);

}

fn main() {

do_count(20);

}

} // verus!Single-Producer, Single-Consumer queue, tutorial

Here, we’ll walk through an example of verifying a single-producer, single-consumer queue. Specifically, we’re interested in the following interface:

type Producer<T>

type Consumer<T>

impl<T> Producer<T> {

pub fn enqueue(&mut self, t: T)

}

impl<T> Consumer<T> {

pub fn dequeue(&mut self) -> T

}

pub fn new_queue<T>(len: usize) -> (Producer<T>, Consumer<T>)Unverified implementation

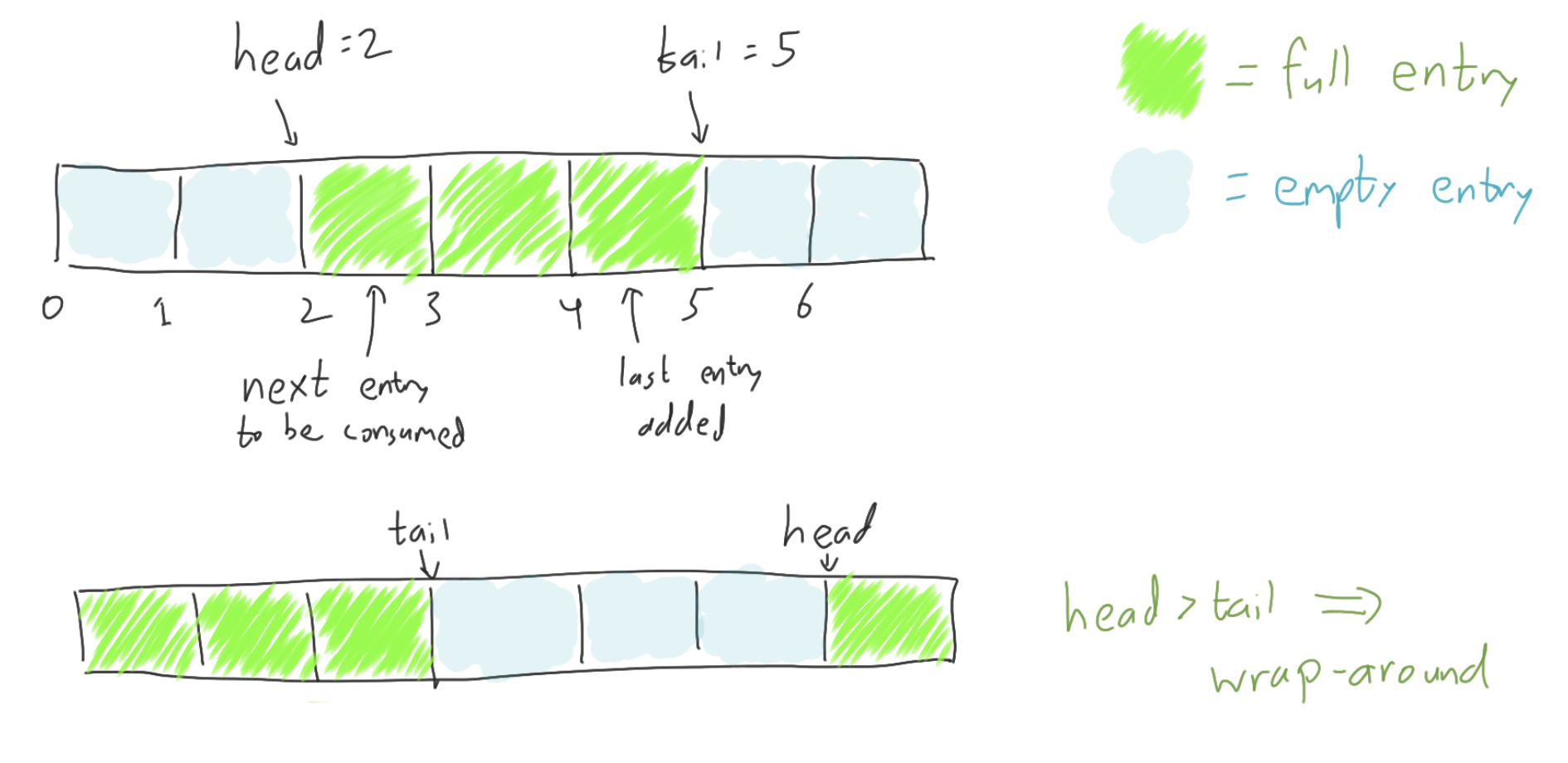

First, let’s discuss the reference implementation, written in ordinary Rust, that we’re going to be verifying (an equivalent of).

The implementation is going to use a ring buffer of fixed length len,

with a head and a tail pointer,

with the producer adding new entries to the tail and

the consumer popping old entries from the head.

Thus the entries in the range [head, tail) will always be full.

If head == tail then the ring buffer will be considered empty,

and if head > tail, then the interval wraps around.

The crucial design choice is what data structure to use for the buffer itself. The key requirements of the buffer are:

- A reference to the buffer memory must be shared between threads (the producer and the consumer)

- Each entry might or might not store a valid

Telement at any given point in time.

In our unverified Rust implementation, we’ll let each entry be an UnsafeCell.

The UnsafeCell gives us interior mutability, so that we can

read and write the contents from multiple threads without

any extra metadata associated to each entry. UnsafeCell is of course, as the name suggests, unsafe, meaning that it’s up to the programmer to ensure the all these reads and writes are performed safely. For our purposes, safely mostly means data-race-free.

More specifically, we’ll use an UnsafeCell<MaybeUninit<T>> for each entry.

The MaybeUninit

allows for the possibility that an entry is uninitialized. Like with UnsafeCell, there are no

runtime safety checks, so it is entirely upon the programmer to make sure it doesn’t try to read

from the entry when it’s uninitialized.

Hang on, why not just use

Option<T>?To be safer, we could use an

Option<T>instead ofMaybeUninit<T>, but we are already doing low-level data management anyway, and anOption<T>would be less efficient. In particular, if we used anOption<T>, then popping an entry out of the queue would mean we having to writeNoneback into the queue to signify its emptiness. WithMaybeUninit<T>, we can just move theTout and leave the memory “uninitialized” without actually having to overwrite its bytes.)

So the buffer will be represented by UnsafeCell<MaybeUninit<T>>.

We’ll also use atomics to represent the head and tail.

struct Queue<T> {

buffer: Vec<UnsafeCell<MaybeUninit<T>>>,

head: AtomicU64,

tail: AtomicU64,

}The producer and consumer types will each have a reference to the queue.

Also, the producer will have a redundant copy of the tail (which slightly reduces contended

access to the shared atomic tail), and likewise,

the consumer will have a redundant copy of the head.

(This is possible because we only have a single producer and consumer each

the producer is the only entity that ever updates the tail and

the consumer is the only entity that ever updates the head.)

pub struct Producer<T> {

queue: Arc<Queue<T>>,

tail: usize,

}

pub struct Consumer<T> {

queue: Arc<Queue<T>>,

head: usize,

}Finally, we come to the actual implementation:

pub fn new_queue<T>(len: usize) -> (Producer<T>, Consumer<T>) {

// Create a vector of UnsafeCells to serve as the ring buffer

let mut backing_cells_vec = Vec::<UnsafeCell<MaybeUninit<T>>>::new();

while backing_cells_vec.len() < len {

let cell = UnsafeCell::new(MaybeUninit::uninit());

backing_cells_vec.push(cell);

}

// Initialize head and tail to 0 (empty)

let head_atomic = AtomicU64::new(0);

let tail_atomic = AtomicU64::new(0);

// Package it all into a queue object, and make a reference-counted pointer to it

// so it can be shared by the Producer and the Consumer.

let queue = Queue::<T> { head: head_atomic, tail: tail_atomic, buffer: backing_cells_vec };

let queue_arc = Arc::new(queue);

let prod = Producer::<T> { queue: queue_arc.clone(), tail: 0 };

let cons = Consumer::<T> { queue: queue_arc, head: 0 };

(prod, cons)

}

impl<T> Producer<T> {

pub fn enqueue(&mut self, t: T) {

// Loop: if the queue is full, then block until it is not.

loop {

let len = self.queue.buffer.len();

// Calculate the index of the slot right after `tail`, wrapping around

// if necessary. If the enqueue is successful, then we will be updating

// the `tail` to this value.

let next_tail = if self.tail + 1 == len { 0 } else { self.tail + 1 };

// Get the current `head` value from the shared atomic.

let head = self.queue.head.load(Ordering::SeqCst);

// Check to make sure there is room. (We can't advance the `tail` pointer

// if it would become equal to the head, since `tail == head` denotes

// an empty state.)

// If there's no room, we'll just loop and try again.

if head != next_tail as u64 {

// Here's the unsafe part: writing the given `t` value into the `UnsafeCell`.

unsafe {

(*self.queue.buffer[self.tail].get()).write(t);

}

// Update the `tail` (both the shared atomic and our local copy).

self.queue.tail.store(next_tail as u64, Ordering::SeqCst);

self.tail = next_tail;

// Done.

return;

}

}

}

}

impl<T> Consumer<T> {

pub fn dequeue(&mut self) -> T {

// Loop: if the queue is empty, then block until it is not.

loop {

let len = self.queue.buffer.len();

// Calculate the index of the slot right after `head`, wrapping around

// if necessary. If the enqueue is successful, then we will be updating

// the `head` to this value.

let next_head = if self.head + 1 == len { 0 } else { self.head + 1 };

// Get the current `tail` value from the shared atomic.

let tail = self.queue.tail.load(Ordering::SeqCst);

// Check to see if the queue is nonempty.

// If it's empty, we'll just loop and try again.

if self.head as u64 != tail {

// Load the stored message from the UnsafeCell

// (replacing it with "uninitialized" memory).

let t = unsafe {

let mut tmp = MaybeUninit::uninit();

std::mem::swap(&mut *self.queue.buffer[self.head].get(), &mut tmp);

tmp.assume_init()

};

// Update the `head` (both the shared atomic and our local copy).

self.queue.head.store(next_head as u64, Ordering::SeqCst);

self.head = next_head;

// Done. Return the value we loaded out of the buffer.

return t;

}

}

}

}Verified implementation

With verification, we always need to start with the question of what, exactly, we are

verifying. In this case, we aren’t actually going to add any new specifications to

the enqueue or dequeue functions; our only aim is to implement the queue and

show that it is memory-type-safe, e.g., that dequeue returns a well-formed T value without

exhibiting any undefined behavior.

Showing this is not actually trivial! The unverified Rust code above used unsafe code,

which means memory-type-safety is not a given.

In our verified queue implementation, we will need a safe, verified alternative to the

unsafe code. We’ll start with introducing our verified alternative to UnsafeCell<MaybeUninit<T>>.

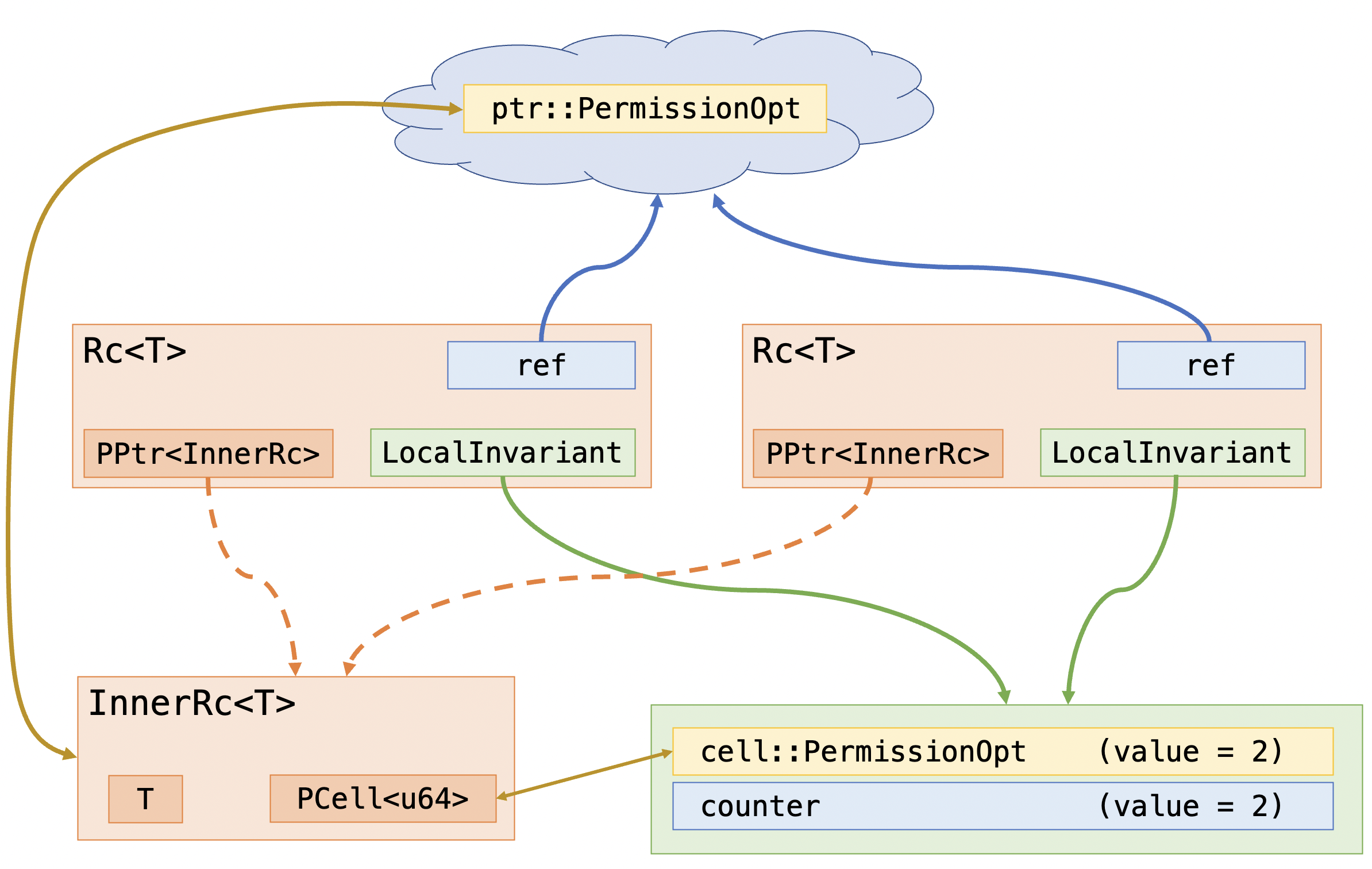

Verified interior mutability with PCell

In Verus, PCell (standing for “permissioned cell”) is the verified equivalent.

(By equivalent, we mean that, subject to inlining and the optimizations doing what we expect,

it ought to generate the same machine code.)

Unlike UnsafeCell, the optional-initializedness is built-in, so PCell<T> can stand in

for UnsafeCell<MaybeUninit<T>>.

In order to verify our use of PCell, Verus requires the user to present a special permission token

on each access (read or write) to the PCell. Each PCell has a unique identifier (given by pcell.id())

and each permission token connects an identifier to the (possibly uninitialized value) stored at the cell.

In the permission token, this value is represented as an Option type, though the option tag has no runtime representation.

fn main() {

// Construct a new pcell and obtain the permission for it.

let (pcell, Tracked(mut perm)) = PCell::<u64>::empty();

// Initially, cell is unitialized, and the `perm` token

// represents that as the value `MemContents::Uninit`.

// The meaning of the permission token is given by its _view_, here `perm@`.

//

// The expression `pcell_opt![ pcell.id() => MemContents::Uninit ]` can be read as roughly,

// "the cell with value pcell.id() is uninitialized".

assert(perm@ === pcell_points![ pcell.id() => MemContents::Uninit ]);

// The above could also be written by accessing the fields of the

// `PointsToData` struct:

assert(perm.id() === pcell.id());

assert(perm.mem_contents() === MemContents::Uninit);

// We can write a value to the pcell (thus initializing it).

// This only requires an `&` reference to the PCell, but it does

// mutate the `perm` token.

pcell.put(Tracked(&mut perm), 5);

// Having written the value, this is reflected in the token:

assert(perm@ === pcell_points![ pcell.id() => MemContents::Init(5) ]);

// We can take the value back out:

let x = pcell.take(Tracked(&mut perm));

// Which leaves it uninitialized again:

assert(x == 5);

assert(perm@ === pcell_points![ pcell.id() => MemContents::Uninit ]);

}After erasure, the above code reduces to something like this:

fn main() {

let pcell = PCell::<u64>::empty();

pcell.put(5);

let x = pcell.take();

}Using PCell in a verified queue.

Let’s look back at the Rust code from above (the code that used UnsafeCell) and mark six points of interest:

four places where we manipulate an atomic and two where we manipulate a cell:

impl<T> Producer<T> {

pub fn enqueue(&mut self, t: T) {

loop {

let len = self.queue.buffer.len();

let next_tail = if self.tail + 1 == len

{ 0 } else { self.tail + 1 };

let head = self.queue.head.load(Ordering::SeqCst); + produce_start (atomic load)

+

if head != next_tail as u64 { +

unsafe { +

(*self.queue.buffer[self.tail].get()).write(t); + write to cell

} +

+

self.queue.tail.store(next_tail as u64, Ordering::SeqCst); + produce_end (atomic store)

self.tail = next_tail;

return;

}

}

}

}

impl<T> Consumer<T> {

pub fn dequeue(&mut self) -> T {

loop {

let len = self.queue.buffer.len();

let next_head = if self.head + 1 == len

{ 0 } else { self.head + 1 };

let tail = self.queue.tail.load(Ordering::SeqCst); + consume_start (atomic load)

+

if self.head as u64 != tail { +

let t = unsafe { +

let mut tmp = MaybeUninit::uninit(); +

std::mem::swap( + read from cell

&mut *self.queue.buffer[self.head].get(), +

&mut tmp); +

tmp.assume_init() +

}; +

+

self.queue.head.store(next_head as u64, Ordering::SeqCst); + consume_end (atomic store)

self.head = next_head;

return t;

}

}

}

}Now, if we’re going to be using a PCell instead of an UnsafeCell, then at the two points where we manipulate the cell,

we are somehow going to need to have the permission token at those points.

Furthermore, we have four points that manipulate atomics. Informally, these atomic operations let us synchronize access to the cell in a data-race-free way. Formally, in the verified setting, these atomics will let us transfer control of the permission tokens that we need to access the cells.

Specifically:

enqueueneeds to obtain the permission atproduce_start, use it to perform a write, and relinquish it atproduce_end.dequeueneeds to obtain the permission atconsume_start, use it to perform a read, and relinquish it atconsume_end.

Woah, woah, woah. Why is this so complicated? We marked the 6 places of interest, so now let’s go build a

tokenized_state_machine!with those 6 transitions already!Good question. That approach might have worked if we were using an atomic to store the value

Tinstead of aPCell(although this would, of course, require theTto be word-sized).However, the

PCellrequires its own considerations. The crucial point is that reading or writing toPCellis non-atomic. That sounds tautological, but I’m not just talking about the name of the type here. By atomic or non-atomic, I’m actually referring to the atomicity of the operation in the execution model. We can’t freely abstractPCelloperations as atomic operations that allow arbitrary interleaving.Okay, so what would go wrong in Verus if we tried?

Recall how it works for atomic memory locations. With an atomic memory location, we can access it any time if we just have an atomic invariant for it. We open the invariant (acquiring permission to access the value along with any ghost data), perform the operation, which is atomic, and then close the invariant. In this scenario, the invariant is restored after a single atomic operation, as is necessary.

But we can’t do the same for

PCell.PCelloperations are non-atomic, so we can’t perform aPCellread or write while an atomic invariant is open. Thus, thePCell’s permission token needs to be transferred at the points in the program where we are performing atomic operations, that is, at the four marked atomic operations.

Abstracting the program while handling permissions

Verus’s tokenized_state_machine! supports a notion called storage.

An instance of a protocol is allowed to store tokens, which client threads can operate on

by temporarily “checking them out”. This provides a convenient means for transferring ownership of

tokens through the system.

Think of the instance as like a library. (Actually, think of it like a network of libraries with an inter-library loan system, where the librarians tirelessly follow a protocol to make sure any given library has a book whenever a patron is ready to check a book out.)

Anyway, the tokenized_state_machine! we’ll use here should look something like this:

- It should be able to store all the permission tokens for each

PCellin the buffer. - It should have 4 transitions:

produce_startshould allow the producer to “check out” the permission for the cell that it is ready to write to.produce_endshould allow the producer to “check back in” the permission that it checked out.consume_startshould allow the consumer to “check out” the permission for the cell that it is ready to read from.consume_endshould allow the consumer to “check back in” the permission that it checked out.

Hang on, I read ahead and learned that Verus’s storage mechanism has a different mechanism for handling reads. Why aren’t we using that for the consumer?

A few reasons.

- That mechanism is useful specifically for read-sharing, which we aren’t using here.

- We actually can’t use it, since the “read” isn’t really a read. Well, at the byte-level, it should just be a read. But we actually do change the value that is stored there in the high-level program semantics: we move the value out and replaced it with an “uninitialized” value. And we have to do it this way, unless

TimplementedCopy.

The producer and consumer each have a small “view into the world”: each one might have access to one of the permission tokens if they have it “checked out”, but that’s it.

To understand the state machine protocol we’re going to build, we have to look at the protocol dually to the producer and the consumer. If the producer and consumer might have 0 or 1 permissions checked out at a given time, then complementarily, the protocol state should be “almost all of the permissions, except possibly up to 2 permissions that are currently checked out”.

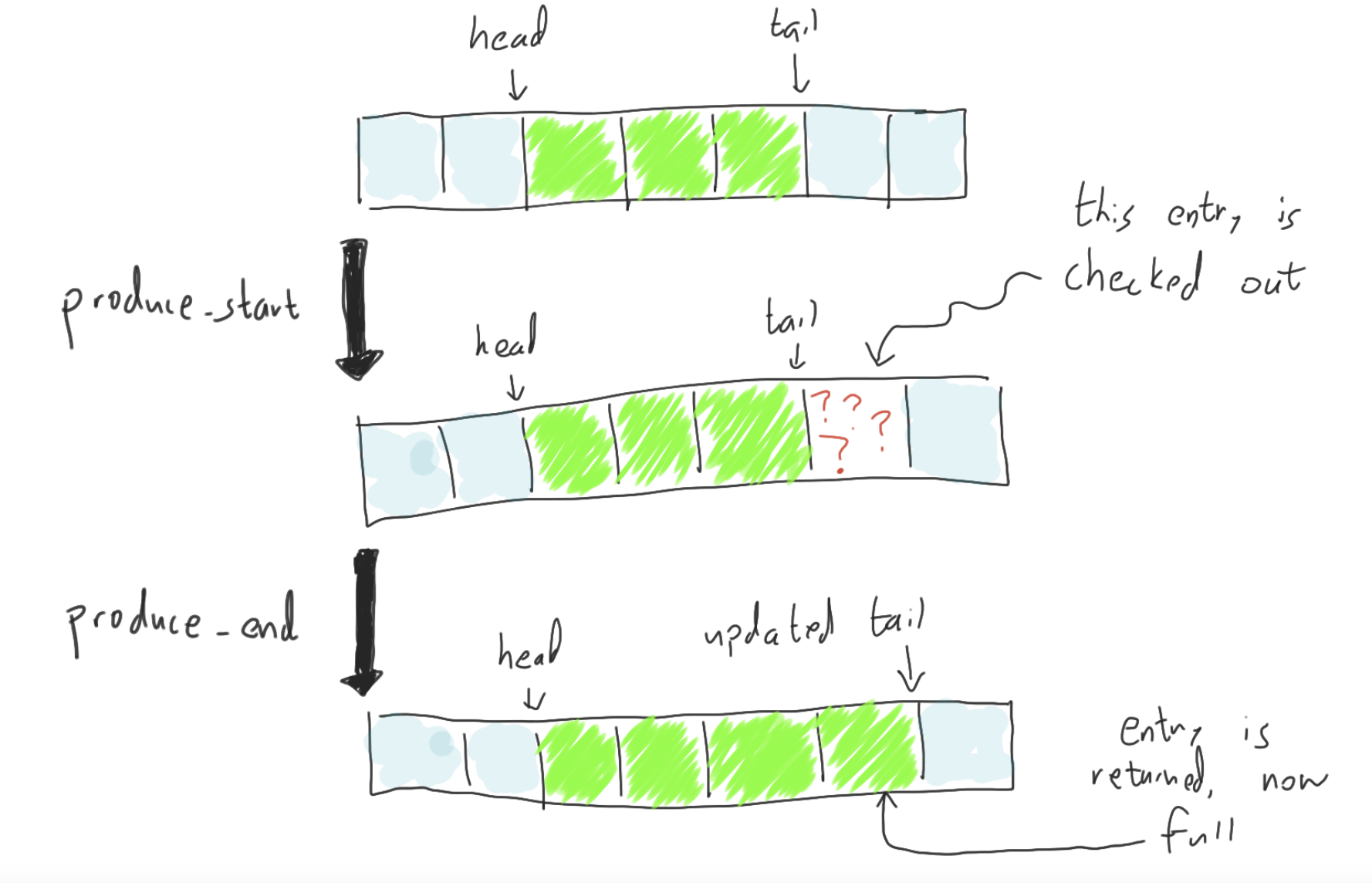

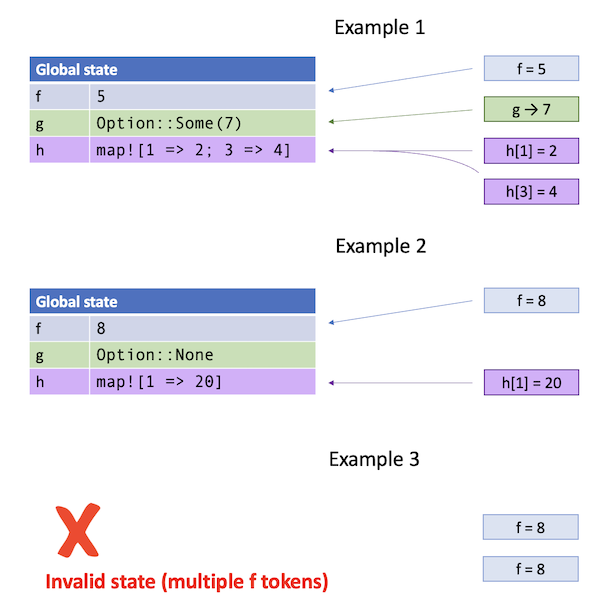

For example, here is the full sequence of operations for an enqueue step, both from the perspective of the producer

and of the storage protocol:

| operation | Producer’s perspective | Storage protocol’s perspective |

|---|---|---|

produce_start | receives a permission for an uninitialized cell | lends out a permission for an uninitialized cell |

| write to the cell | writes to the cell with PCell::put | |

produce_end | returns a permission for the now-initialized cell | receives back the permission, now initialized |

And here is the storage protocol’s perspective, graphically:

Building the tokenized_state_machine!

Now that we finally have a handle on the protocol, let’s implement it. It should have all the following state:

- The value of the shared

headatomic - The value of the shared

tailatomic - The producer’s state

- Is the producer step in progress?

- Local

tailfield saved by the producer

- The consumer’s state

- Is the consumer step in progress?

- Local

headfield saved by the producer

- The IDs of cells in the buffer (so we know what permissions we’re meant to be storing)

- The permissions that are actually stored.

And now, in code:

#[is_variant]

pub enum ProducerState {

Idle(nat), // local copy of tail

Producing(nat),

}

#[is_variant]

pub enum ConsumerState {

Idle(nat), // local copy of head

Consuming(nat),

}

tokenized_state_machine!{FifoQueue<T> {

fields {

// IDs of the cells used in the ring buffer.

// These are fixed throughout the protocol.

#[sharding(constant)]

pub backing_cells: Seq<CellId>,

// All the stored permissions

#[sharding(storage_map)]

pub storage: Map<nat, cell::PointsTo<T>>,

// Represents the shared `head` field

#[sharding(variable)]

pub head: nat,

// Represents the shared `tail` field

#[sharding(variable)]

pub tail: nat,

// Represents the local state of the single-producer

#[sharding(variable)]

pub producer: ProducerState,

// Represents the local state of the single-consumer

#[sharding(variable)]

pub consumer: ConsumerState,

}

// ...

}}As you can see, Verus allows us to utilize storage by declaring it in the sharding strategy.

Here, the strategy we use is storage_map. In the storage_map strategy,

the stored token items are given by the values in the map.

The keys in the map can be an arbitrary type that we choose to use as an index to refer to

stored items. Here, the index we’ll use will just the index into the queue.

Thus keys of the storage map will take on values in the range [0, len).

Hmm. For a second, I thought you were going to use the cell IDs as keys in the map. Could it work that way as well?

Yes, although it would be slightly more complicated. For one, we’d need to track an invariant that all the IDs are distinct, just to show that there aren’t overlapping keys. But that’s an extra invariant to prove that we don’t need if we do it this way. Much easier to declare a correspondance between

backing_cells[i]andstorage[i]for eachi.Wait, does that mean we aren’t going to prove an invariant that all the IDs are distinct? That can’t possibly be right, right?

It does mean that!

Certainly, it is true that in any execution of the program, the IDs of the cells are going to be distinct, but this isn’t something we need to track ourselves as users of the

PCelllibrary.You might be familiar with an approach where we have some kind of “heap model,” which maps addresses to values. When we update one entry, we have to show how nothing else of interest is changing. But like I just said, we aren’t using that sort of heap model in the

Fifoprotocol, we’re just indexing based on queue index. And we don’t rely on any sort of heap model like that in the implementations ofenqueueordequeueeither; there, we use the separated permission model.

Now, let’s dive into the transitions. Let’s start with produce_start transition.

transition!{

produce_start() {

// In order to begin, we have to be in ProducerState::Idle.

require(pre.producer.is_Idle());

// We'll be comparing the producer's _local_ copy of the tail

// with the _shared_ version of the head.

let tail = pre.producer.get_Idle_0();

let head = pre.head;

assert(0 <= tail && tail < pre.backing_cells.len());

// Compute the incremented tail, wrapping around if necessary.

let next_tail = Self::inc_wrap(tail, pre.backing_cells.len());

// We have to check that the buffer isn't full to proceed.

require(next_tail != head);

// Update the producer's local state to be in the `Producing` state.

update producer = ProducerState::Producing(tail);

// Withdraw ("check out") the permission stored at index `tail`.

// This creates a proof obligation for the transition system to prove that

// there is actually a permission stored at this index.

withdraw storage -= [tail => let perm] by {

assert(pre.valid_storage_at_idx(tail));

};

// The transition needs to guarantee to the client that the

// permission they are checking out:

// (i) is for the cell at index `tail` (the IDs match)

// (ii) the permission indicates that the cell is empty

assert(

perm.id() === pre.backing_cells.index(tail as int)

&& perm.is_uninit()

) by {

assert(!pre.in_active_range(tail));

assert(pre.valid_storage_at_idx(tail));

};

}

}It’s a doozy, but we just need to break it down into three parts:

- The enabling conditions (

require).- The client needs to exhibit that these conditions hold

(e.g., by doing the approparite comparison between the

headandtailvalues) in order to perform the transition.

- The client needs to exhibit that these conditions hold

(e.g., by doing the approparite comparison between the

- The stuff that gets updated:

- We

updatethe producer’s local state - We

withdraw(“check out”) the permission, which is represented simply by removing the key from the map.

- We

- Guarantees about the result of the transition.

- These guarantees will follow from internal invariants about the

FifoQueuesystem - In this case, we care about guarantees on the permission token that is checked out.

- These guarantees will follow from internal invariants about the

Now, let’s see produce_end. The main difference, here, is that the client is checking the permission token

back into the system, which means we have to provide the guarantees about the permission token

in the enabing condition rather than in a post-guarantee.

transition!{

// This transition is parameterized by the value of the permission

// being inserted. Since the permission is being deposited

// (coming from "outside" the system) we can't compute it as a

// function of the current state, unlike how we did it for the

// `produce_start` transition).

produce_end(perm: cell::PointsTo<T>) {

// In order to complete the produce step,

// we have to be in ProducerState::Producing.

require(pre.producer.is_Producing());

let tail = pre.producer.get_Producing_0();

assert(0 <= tail && tail < pre.backing_cells.len());

// Compute the incremented tail, wrapping around if necessary.

let next_tail = Self::inc_wrap(tail, pre.backing_cells.len());

// This time, we don't need to compare the `head` and `tail` - we already

// check that, and anyway, we don't have access to the `head` field

// for this transition. (This transition is supposed to occur while

// modifying the `tail` field, so we can't do both.)

// However, we _do_ need to check that the permission token being

// checked in satisfies its requirements. It has to be associated

// with the correct cell, and it has to be full.

require(perm.id() === pre.backing_cells.index(tail as int)

&& perm.is_init());

// Perform our updates. Update the tail to the computed value,

// both the shared version and the producer's local copy.

// Also, move back to the Idle state.

update producer = ProducerState::Idle(next_tail);

update tail = next_tail;

// Check the permission back into the storage map.

deposit storage += [tail => perm] by { assert(pre.valid_storage_at_idx(tail)); };

}

}Check the full source for the consume_start and consume_end transitions, which are pretty similar,

and for the invariants we use to prove that the transitions are all well-formed.

Verified Implementation

For the implementation, let’s start with the definitions for the Producer, Consumer, and Queue structs,

which are based on the ones from the unverified implementation, augmented with proof variables.

The Producer, for example, gets a proof token for the producer: ProducerState field.

The well-formedness condition here demands us to be in the ProducerState::Idle state

(in every call to enqueue, we must start and end in the Idle state).

pub struct Producer<T> {

queue: Arc<Queue<T>>,

tail: usize,

producer: Tracked<FifoQueue::producer<T>>,

}

impl<T> Producer<T> {

pub closed spec fn wf(&self) -> bool {

(*self.queue).wf()

&& self.producer@.instance_id() == (*self.queue).instance@.id()

&& self.producer@.value() == ProducerState::Idle(self.tail as nat)

&& (self.tail as int) < (*self.queue).buffer@.len()

}

}For the Queue type itself, we add an atomic invariant for the head and tail fields:

struct_with_invariants!{

struct Queue<T> {

buffer: Vec<PCell<T>>,

head: AtomicU64<_, FifoQueue::head<T>, _>,

tail: AtomicU64<_, FifoQueue::tail<T>, _>,

instance: Tracked<FifoQueue::Instance<T>>,

}

pub closed spec fn wf(&self) -> bool {

predicate {

// The Cell IDs in the instance protocol match the cell IDs in the actual vector:

&&& self.instance@.backing_cells().len() == self.buffer@.len()

&&& forall|i: int| 0 <= i && i < self.buffer@.len() as int ==>

self.instance@.backing_cells().index(i) ===

self.buffer@.index(i).id()

}

invariant on head with (instance) is (v: u64, g: FifoQueue::head<T>) {

&&& g.instance_id() === instance@.id()

&&& g.value() == v as int

}

invariant on tail with (instance) is (v: u64, g: FifoQueue::tail<T>) {

&&& g.instance_id() === instance@.id()

&&& g.value() == v as int

}

}

}Now we can implement and verify enqueue:

impl<T> Producer<T> {

fn enqueue(&mut self, t: T)

requires

old(self).wf(),

ensures