Verus overview

Verus is a tool for verifying the correctness of code written in Rust. The main goal is to verify full functional correctness of low-level systems code, building on ideas from existing verification frameworks like Dafny, Boogie, F*, VCC, Prusti, Creusot, Aeneas, Cogent, Rocq, and Isabelle/HOL. Verification is static: Verus adds no run-time checks, but instead uses computer-aided theorem proving to statically verify that executable Rust code will always satisfy some user-provided specifications for all possible executions of the code.

In more detail, Verus aims to:

- provide a pure mathematical language for expressing specifications (like Dafny, Creusot, F*, Coq, Isabelle/HOL)

- provide a mathematical language for expressing proofs (like Dafny, F*, Coq, Isabelle/HOL) based exclusively on classical logic (like Dafny)

- provide a low-level, imperative language for expressing executable code (like VCC), based on Rust (like Prusti, Creusot, and Aeneas)

- generate small, simple verification conditions that an SMT solver

like Z3 can solve efficiently,

based on the following principles:

- keep the mathematical specification language close to the SMT solver’s mathematical language (like Boogie)

- use lightweight linear type checking, rather than SMT solving, to reason about memory and aliasing (like Cogent, Creusot, Aeneas, and linear Dafny)

We believe that Rust is a good language for achieving these goals. Rust combines low-level data manipulation, including manual memory management, with an advanced, high-level, safe type system. The type system includes features commonly found in higher-level verification languages, including algebraic datatypes (with pattern matching), type classes, and first-class functions. This makes it easy to express specifications and proofs in a natural way. More importantly, Rust’s type system includes sophisticated support for linear types and borrowing, which takes care of much of the reasoning about memory and aliasing. As a result, the remaining reasoning can ignore most memory and aliasing issues, and treat the Rust code as if it were code written in a purely functional language, which makes verification easier.

At present, we do not intend to:

- support all Rust features and libraries (instead, we will focus a high-value features and libraries needed to support our users)

- verify the verifier itself

- verify the Rust/LLVM compilers

This guide

This guide assumes that you’re already somewhat familiar with the basics of Rust programming. (If you’re not, we recommend spending a couple hours on the Learn Rust page.) Familiarity with Rust is useful for Verus, because Verus builds on Rust’s syntax and Rust’s type system to express specifications, proofs, and executable code. In fact, there is no separate language for specifications and proofs; instead, specifications and proofs are written in Rust syntax and type-checked with Rust’s type checker. So if you already know Rust, you’ll have an easier time getting started with Verus.

Nevertheless, verifying the correctness of Rust code requires concepts and techniques

beyond just writing ordinary executable Rust code.

For example, Verus extends Rust’s syntax (via macros) with new concepts for

writing specifications and proofs, such as forall, exists, requires, and ensures,

as well as introducing new types, like the mathematical integer types int and nat.

It can be challenging to prove that a Rust function satisfies its postconditions (its ensures clauses)

or that a call to a function satisfies the function’s preconditions (its requires clauses).

Therefore, this guide’s tutorial will walk you through the various concepts and techniques,

starting with relatively simple concepts (basic proofs about integers),

moving on to more moderately difficult challenges (inductive proofs about data structures),

and then on to more advanced topics such as proofs about arrays using forall and exists

and proofs about concurrent code.

All of these proofs are aided by an automated theorem prover

(specifically, Z3,

a satisfiability-modulo-theories solver, or “SMT solver” for short).

The SMT solver will often be able to prove simple properties,

such as basic properties about booleans or integer arithmetic,

with no additional help from the programmer.

However, more complex proofs often require effort from both the programmer and the SMT solver.

Therefore, this guide will also help you understand the strengths and limitations of SMT solving,

and give advice on how to fill in the parts of proofs that SMT solvers cannot handle automatically.

(For example, SMT solvers usually cannot automatically perform proofs by induction,

but you can write a proof by induction simply by writing a recursive Rust function whose ensures

clause expresses the induction hypothesis.)

Getting Started

In this chapter, we’ll walk you through setting up Verus and running it on a sample program. You can either:

If you don’t want to install Verus yet, but just want to experiment with it or follow along the tutorial, you can also run Verus through the Verus playground in your browser.

Getting started on the command line

1. Install Verus.

To install Verus, follow the instructions at INSTALL.md.

2. Verify a sample program.

Create a file called getting_started.rs, and paste in the following contents:

use vstd::prelude::*;

verus! {

spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

fn main() {

assert(min(10, 20) == 10);

assert(min(-10, -20) == -20);

assert(forall|i: int, j: int| min(i, j) <= i && min(i, j) <= j);

assert(forall|i: int, j: int| min(i, j) == i || min(i, j) == j);

assert(forall|i: int, j: int| min(i, j) == min(j, i));

}

} // verus!To run Verus on the file:

If on macOS, Linux, or similar system, run:

/path/to/verus getting_started.rs

If on Windows, run:

.\path\to\verus.exe getting_started.rs

You should see the following output:

note: verifying root module

verification results:: 1 verified, 0 errors

This indicates that Verus successfully verified 1 function (the main function).

Try it on code that won’t verify

If you want, you can try editing the getting_started.rs file

to see a verification failure.

For example, if you add the following line to main:

assert(forall|i: int, j: int| min(i, j) == min(i, i));

you will see an error message:

note: verifying root module

error: assertion failed

--> getting_started.rs:19:12

|

19 | assert(forall|i: int, j: int| min(i, j) == min(i, i));

| ^^^^^^ assertion failed

error: aborting due to previous error

verification results:: 0 verified, 1 errors

3. Compile the program

The command above only verifies the code, but does not compile it. If you also want to compile

it to a binary, you can verus with the --compile flag.

If on macOS, Linux, or similar system, run:

/path/to/verus getting_started.rs --compile

If on Windows, run:

.\path\to\verus.exe getting_started.rs --compile

Either will create a binary getting_started.

However, in this example, the binary won’t do anything interesting

because the main function contains no executable code —

it contains only statically-checked assertions,

which are erased before compilation.

4. Learn more about Verus

Continue with the tutorial, starting with an explanation of the verus! macro from the above example.

Getting started with VSCode

This page will get you set up using Verus in VSCode using the verus-analyzer extension. Note that verus-analyzer is very experimental.

1. Create a Rust crate

Install cargo if you haven’t yet. Find a scratch directory to use, and run:

cargo init verus_test

This will create the following files:

verus_test/Cargo.tomlverus_test/src/main.rs

2. Install verus-analyzer via VSCode

Open VSCode and install verus-analyzer through the VSCode marketplace.

3. Open your workspace in VSCode.

Go to File > Open Folder and select the verus_test directory.

4. Disable rust-analyzer

If you have rust-analyzer installed, you’ll want to disable it, as it is redundant and (without the proper configuration) will result in additional errors that you won’t want to see.

To disable rust-analyzer, go to the extensions panel, find rust-analyzer, click the gear icon, and select “Disable (Workspace)”. (This will disable rust-analyzer only for the current workspace.) Then click the blue “Restart Extensions” button that appears.

5. Test that Verus is working.

Within your verus_test project, navigate to the src/main.rs file. Paste in the following:

use vstd::prelude::*;

verus! {

spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

fn main() {

assert(min(10, 20) == 10);

assert(min(-10, -20) == -20);

assert(forall|i: int, j: int| min(i, j) <= i && min(i, j) <= j);

assert(forall|i: int, j: int| min(i, j) == i || min(i, j) == j);

assert(forall|i: int, j: int| min(i, j) == min(j, i));

assert(forall|i: int, j: int| min(i, j) == min(i, i));

}

} // verus!Save the file in order to trigger verus-analyzer.

This program has an error which Verus should detect.

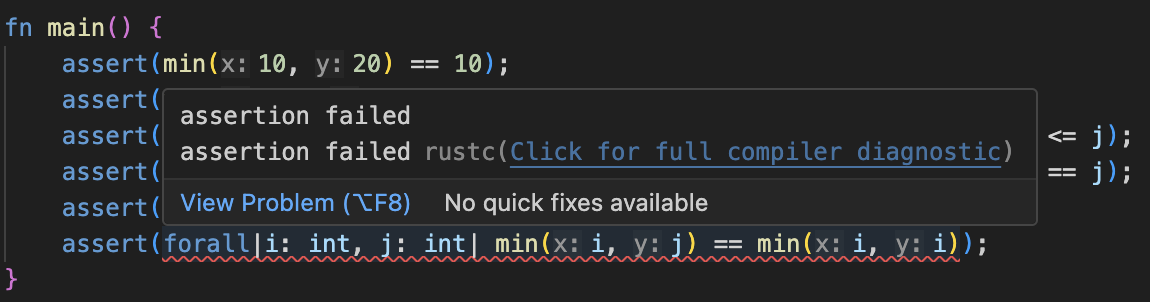

If everything is working correctly, you should see an error from Verus on the final assert line:

If you click the link, “Click for full compiler diagnostic”, you should see an error like:

error: assertion failed

--> verus_test/src/main.rs:19:12

|

19 | assert(forall|i: int, j: int| min(i, j) == min(i, i));

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ assertion failed

Delete this line, and now Verus should say that the file verifies successfully.

6. Learn more about the verus-analyzer extension.

See the verus-analyzer README for more information and tips on using verus-analyzer.

7. Learn more about Verus

Continue with the tutorial, starting with an explanation of the verus! macro from the above example.

The verus! macro

Recall the sample program from the Getting Started chapters:

use vstd::prelude::*;

verus! {

spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

fn main() {

assert(min(10, 20) == 10);

assert(min(-10, -20) == -20);

assert(forall|i: int, j: int| min(i, j) <= i && min(i, j) <= j);

assert(forall|i: int, j: int| min(i, j) == i || min(i, j) == j);

assert(forall|i: int, j: int| min(i, j) == min(j, i));

}

} // verus!What is this exactly? Is it Verus? Is it Rust?

It’s both! It’s a Rust source file that uses the verus! macro to embed Verus.

Specifically, the verus! macro extends Rust’s syntax with verification-related features

such as preconditions, postconditions, assertions, forall, exists, etc.,

which we will learn more about in this tutorial.

Verus uses a macro named verus! to extend Rust’s syntax with verification-related features

such as preconditions, postconditions, assertions, forall, exists, etc.

Therefore, each file in a crate will typically take the following form:

use vstd::prelude::*;

verus! {

// ...

}The vstd::prelude exports the verus! macro along with some other Verus utilities.

The verus! macro, besides extending Rust’s syntax, also

tells Verus to verify the functions contained within.

By default, Verus verifies everything inside the verus! macro and ignores anything

defined outside the verus! macro. There are various attributes and directives to modify

this behavior (e.g., see this chapter), but for

most of the tutorial, we will consider all code to be Verus code that must be in the

verus! macro.

Note for the tutorial.

In the remainder of this guide, we will omit these declarations from the examples to avoid clutter.

However, remember that any example code should be placed inside the verus! { ... } block,

and that the file should contain use vstd::prelude::*;.

Alternate syntax.

Verus also supports an alternate, attribute-based syntax.

This syntax may be helpful when you want to minimize changes to an existing Rust project.

However, because the verus! syntax is cleaner and simpler, we’ll stick to that in this

tutorial.

Basic specifications

Verus programs contain specifications to describe the intended behavior of the code. These specifications include preconditions, postconditions, assertions, and loop invariants. Specifications are one form of ghost code — code that appears in the Rust source code for verification’s sake, but does not appear in the compiled executable.

This chapter will walk through some basic examples of preconditions, postconditions, and assertions, showing the syntax for writing these specifications and discussing integer arithmetic and equality in specifications.

Preconditions (requires clauses)

Let’s start with a small example.

Suppose we want to verify a function octuple that multiplies a number by 8:

fn octuple(x1: i8) -> i8 {

let x2 = x1 + x1;

let x4 = x2 + x2;

x4 + x4

}If we ask Verus to verify this code, Verus immediately reports errors about octuple:

error: possible arithmetic underflow/overflow

|

| let x2 = x1 + x1;

| ^^^^^^^

Here, Verus cannot prove that the result of x1 + x1 fits in an 8-bit i8 value,

which allows values in the range -128…127.

If x1 were 100, for example, x1 + x1 would be 200, which is larger than 127.

We need to make sure that when octuple is called, the argument x1 is not too large.

We can do this by adding preconditions (also known as “requires clauses”)

to octuple specifying which values for x1 are allowed.

In Verus, preconditions are written with a requires followed by zero or more boolean expressions

separated by commas:

fn octuple(x1: i8) -> i8

requires

-64 <= x1,

x1 < 64,

{

let x2 = x1 + x1;

let x4 = x2 + x2;

x4 + x4

}The two preconditions above say that x1 must be at least -64 and less than 64,

so that x1 + x1 will fit in the range -128…127.

This fixes the error about x1 + x1, but we still get an error about x2 + x2:

error: possible arithmetic underflow/overflow

|

| let x4 = x2 + x2;

| ^^^^^^^

If we want x1 + x1, x2 + x2, and x4 + x4 to all succeed, we need a tighter bound on x1:

fn octuple(x1: i8) -> i8

requires

-16 <= x1,

x1 < 16,

{

let x2 = x1 + x1;

let x4 = x2 + x2;

x4 + x4

}This time, verification is successful.

Now suppose we try to call octuple with a value that does not satisfy octuple’s precondition:

fn main() {

let n = octuple(20);

}For this call, Verus reports an error, since 20 is not less than 16:

error: precondition not satisfied

|

| x1 < 16,

| ------- failed precondition

...

| let n = octuple(20);

| ^^^^^^^^^^^

If we pass 10 instead of 20, verification succeeds:

fn main() {

let n = octuple(10);

}Postconditions (ensures clauses)

Suppose we want to verify properties about the value returned from octuple.

For example, we might want to assert that the value returned from octuple

is 8 times as large as the argument passed to octuple.

Let’s try putting an assertion in main that the result of calling octuple(10) is 80:

fn main() {

let n = octuple(10);

assert(n == 80);

}Although octuple(10) really does return 80,

Verus nevertheless reports an error:

error: assertion failed

|

| assert(n == 80);

| ^^^^^^^ assertion failed

The error occurs because, even though octuple multiplies its argument by 8,

octuple doesn’t publicize this fact to the other functions in the program.

To do this, we can add postconditions (ensures clauses) to octuple specifying

some properties of octuple’s return value:

fn octuple(x1: i8) -> (x8: i8)

requires

-16 <= x1,

x1 < 16,

ensures

x8 == 8 * x1,

{

let x2 = x1 + x1;

let x4 = x2 + x2;

x4 + x4

}To write a property about the return value, we need to give a name to the return value.

The Verus syntax for this is -> (name: return_type). In the example above,

saying -> (x8: i8) allows the postcondition x8 == 8 * x1 to use the name x8

for octuple’s return value.

Preconditions and postconditions establish a modular verification protocol between functions.

When main calls octuple, Verus checks that the arguments in the call satisfy octuple’s

preconditions.

When Verus verifies the body of the octuple function,

it can assume that the preconditions are satisfied,

without having to know anything about the exact arguments passed in by main.

Likewise, when Verus verifies the body of the main function,

it can assume that octuple satisfies its postconditions,

without having to know anything about the body of octuple.

In this way, Verus can verify each function independently.

This modular verification approach breaks verification into small, manageable pieces,

which makes verification more efficient than if Verus tried to verify

all of a program’s functions together simultaneously.

Nevertheless, writing preconditions and postconditions requires significant programmer effort —

if you want to verify a large program with a lot of functions,

you’ll probably spend substantial time writing preconditions and postconditions for the functions.

assert and assume

While requires and ensures connect functions together,

assert makes a local, private request to the SMT solver to prove a certain fact.

(Note: assert(...) should not be confused with the Rust assert!(...) macro —

the former is statically checked using the SMT solver, while the latter is checked at run-time.)

assert has an evil twin named assume, which asks the SMT solver to

simply accept some boolean expression as a fact without proof.

While assert is harmless and won’t cause any unsoundness in a proof,

assume can easily enable a “proof” of a fact that isn’t true.

In fact, by writing assume(false), you can prove anything you want:

assume(false);

assert(2 + 2 == 5); // succeedsVerus programmers often use assert and assume to help develop and debug proofs.

They may add temporary asserts to determine which facts the SMT solver can prove

and which it can’t,

and they may add temporary assumes to see which additional assumptions are necessary

for the SMT solver to complete a proof,

or as a placeholder for parts of the proof that haven’t yet been written.

As the proof evolves, the programmer replaces assumes with asserts,

and may eventually remove the asserts.

A complete proof may contain asserts, but should not contain any assumes.

(In some situations, assert can help the SMT solver complete a proof,

by giving the SMT hints about how to manipulate forall and exists expressions.

There are also special forms of assert, such as assert(...) by(bit_vector),

to help prove properties about bit vectors, nonlinear integer arithmetic,

forall expressions, etc.)

Executable code and ghost code

Let’s put everything from this section together into a final version of our example program:

use vstd::prelude::*;

verus! {

#[verifier::external_body]

fn print_two_digit_number(i: i8)

requires

-99 <= i < 100,

{

println!("The answer is {}", i);

}

fn octuple(x1: i8) -> (x8: i8)

requires

-16 <= x1 < 16,

ensures

x8 == 8 * x1,

{

let x2 = x1 + x1;

let x4 = x2 + x2;

x4 + x4

}

fn main() {

let n = octuple(10);

assert(n == 80);

print_two_digit_number(n);

}

} // verus!Here, we’ve made a few final adjustments.

First, we’ve combined the two preconditions -16 <= x1 and x1 < 16

into a single preconditon -16 <= x1 < 16,

since Verus lets us chain multiple inequalities together in a single expression

(equivalently, we could have also written -16 <= x1 && x1 < 16).

Second, we’ve added a function print_two_digit_number to print the result of octuple.

Unlike main and octuple, we ask Verus not to verify print_two_digit_number.

We do this by marking it #[verifier::external_body],

so that Verus pays attention to the function’s preconditions and postconditions but ignores

the function’s body.

This is common in projects using Verus:

you may want to verify some of it (perhaps the program’s core algorithms),

but leave other aspects, such as input-output operations, unverified.

More generally, since verifying all the software in the world is still infeasible,

there will be some boundary between verified code and unverified code,

and #[verifier::external_body] can be used to mark this boundary.

We can now compile the program above using the --compile option to Verus:

./target-verus/release/verus --compile ../examples/guide/requires_ensures.rs

This will produce an executable that prints a message when run:

The answer is 80

Note that the generated executable does not contain the requires, ensures, and assert code,

since these are only needed during static verification,

not during run-time execution.

We refer to requires, ensures, assert, and assume as ghost code,

in contast to the executable code that actually gets compiled.

Verus erases all ghost code before compilation so that it imposes no run-time overhead.

Expressions and operators for specifications

Verus extends Rust’s syntax with additional operators and expressions useful for writing specifications. For example:

forall|i: int, j: int| 0 <= i <= j < len ==> f(i, j)This snippet illustrates:

- the

forallquantifier, which we will cover later - chained operators

- implication operators

Here, we’ll discuss the last two, along with Verus notation for conjunction, disjunction, and field access.

Chained inequalities

Specifications can chain together multiple <=, <, >=, and > operations.

For example,

0 <= i <= j < len has the same meaning as 0 <= i && i <= j && j < len.

Logical implication

To make specifications more readable, Verus supports an implication operator ==>.

The expression a ==> b (pronounced “a implies b”) is logically equivalent to !a || b.

As an example, the expression

forall|i: int, j: int| 0 <= i <= j < len ==> f(i, j)

means that for every pair i and j such that 0 <= i <= j < len, f(i, j) is true.

Note that ==> has lower precedence that most other boolean operations.

For example, a ==> b && c means a ==> (b && c).

Verus also supports two-way implication for booleans (<==>) with even lower precedence,

so that a <==> b && c is equivalent to a == (b && c).

See the reference for a full description of precedence

in Verus.

Conjunction and disjunction

Because &&, ||, and ==> are so common in Verus specifications, it is often desirable to have

low precedence versions of && and ||. Verus also supports “triple-and” (&&&) and

“triple-or” (|||) which are equivalent to && and || except for their precedence.

Implication ==> and equivalence <==> bind more tightly than either &&& or |||.

&&& and ||| are also convenient for the “bulleted list” form:

&&& a ==> b

&&& c

&&& d <==> e && f

This has the same meaning as (a ==> b) && c && (d <==> (e && f)).

Accessing fields of a struct or enum

Verus has ->, is, and matches syntax for accessing fields

of structs

and matching variants of enums.

Integer types

Rust supports various fixed-bit-width integer types:

i8,i16,i32,i64,i128,isizeu8,u16,u32,u64,u128,usize

To these, Verus adds two more integer types to represent arbitrarily large integers in specifications:

- int

- nat

The type int is the most fundamental type for reasoning about integer arithmetic in Verus.

It represents all mathematical integers,

both positive and negative.

The SMT solver contains direct support for reasoning about values of type int.

Internally, Verus uses int to represent the other integer types,

adding mathematical constraints to limit the range of the integers.

For example, a value of the type nat of natural numbers

is a mathematical integer constrained to be greater than or equal to 0.

Rust’s fixed-bit-width integer types have both a lower and upper bound;

a u8 value is an integer constrained to be greater than or equal to 0 and less than 256:

fn test_u8(u: u8) {

assert(0 <= u < 256);

}The bounds of usize and isize are platform dependent.

By default, Verus assumes that these types may be either 32 bits or 64 bits wide,

but this assumption may be configured.

Verus recognizes the constants

usize::BITS,

usize::MAX,

isize::MAX,

and

isize::MIN,

which are useful for reasoning symbolically

about the usize integer range.

Using integer types in specifications

Since there are 14 different integer types (counting int, nat, u8…usize, and i8…isize),

it’s not always obvious which type to use when writing a specification.

Our advice is to be as general as possible by default:

- Use

intby default, since this is the most general type and is supported most efficiently by the SMT solver.- Example: the Verus sequence library

uses

intfor most operations, such as indexing into a sequence. - Note: as discussed below, most arithmetic operations in specifications produce values of type

int, so it is usually most convenient to write specifications in terms ofint.

- Example: the Verus sequence library

uses

- Use

natfor return values and datatype fields where the 0 lower bound is likely to provide useful information, such as lengths of sequences.- Example: the Verus

Seq::len()function returns anatto represent the length of a sequence. - The type

natis also handy for proving that recursive definitions terminate; you might to define a recursivefactorialfunction to take a parameter of typenat, if you don’t want to provide a definition offactorialfor negative integers.

- Example: the Verus

- Use fixed-width integer types for fixed-with values such as bytes.

- Example: the bytes of a network packet can be represented with type

Seq<u8>, an arbitrary-length sequence of 8-bit values.

- Example: the bytes of a network packet can be represented with type

Note that int and nat are usable only in ghost code;

they cannot be compiled to executable code.

For example, the following will not work:

fn main() {

let i: int = 5; // FAILS: executable variable `i` cannot have type `int`, which is ghost-only

}Integer constants

As in ordinary Rust, integer constants in Verus can include their type as a suffix

(e.g. 7u8 or 7u32 or 7int) to precisely specify the type of the constant:

fn test_consts() {

let u: u8 = 1u8;

assert({

let i: int = 2int;

let n: nat = 3nat;

0int <= u < i < n < 4int

});

}Usually, but not always, Verus and Rust will be able to infer types for integer constants, so that you can omit the suffixes unless the Rust type checker complains about not being able to infer the type:

fn test_consts_infer() {

let u: u8 = 1;

assert({

let i: int = 2;

let n: nat = 3;

0 <= u < i < n < 4

});

}Note that the values 0, u, i, n, and 4 in the expression 0 <= u < i < n < 4

are allowed to all have different types —

you can use <=, <, >=, >, ==, and != to compare values of different integer types inside ghost code

(e.g. comparing a u8 to an int in u < i).

Constants with the suffix int and nat can be arbitrarily large:

fn test_consts_large() {

assert({

let i: int = 0x10000000000000000000000000000000000000000000000000000000000000000int;

let j: int = i + i;

j == 2 * i

});

}Integer coercions using “as”

As in ordinary rust, the as operator coerces one integer type to another.

In ghost code, you can use as int or as nat to coerce to int or nat:

fn test_coerce() {

let u: u8 = 1;

assert({

let i: int = u as int;

let n: nat = u as nat;

u == i && u == n

});

}You can use as to coerce a value v to a type t even if v is too small or too large to fit in t.

However, if the value v is outside the bounds of type t,

then the expression v as t will produce some arbitrary value of type t:

fn test_coerce_fail() {

let v: u16 = 257;

let u: u8 = v as u8;

assert(u == v); // FAILS, because u has type u8 and therefore cannot be equal to 257

}This produces an error for the assertion, along with a hint that the value in the as coercion might have been out of range:

error: assertion failed

|

| assert(u == v); // FAILS, because u has type u8 and therefore cannot be equal to 257

| ^^^^^^ assertion failed

note: recommendation not met: value may be out of range of the target type (use `#[verifier::truncate]` on the cast to silence this warning)

|

| let u: u8 = v as u8;

| ^

See the reference for more on how Verus defines as-truncation and how to reason about it.

Integer arithmetic

Integer arithmetic behaves a bit differently in ghost code than in executable code.

In executable code, we frequently have to reason about integer overflow, and in fact, Verus requires us to prove the absence of overflow. The following operation fails because the arithmetic might produce an operation greater than 255:

fn test_sum(x: u8, y: u8) {

let sum1: u8 = x + y; // FAILS: possible overflow

}error: possible arithmetic underflow/overflow

|

| let sum1: u8 = x + y; // FAILS: possible overflow

| ^^^^^

In ghost code, however,

common arithmetic operations

(+, -, *, /, %) never overflow or wrap.

To make this possible, Verus widens the results of many operations;

for example, adding two u8 values is widened to type int.

fn test_sum2(x: u8, y: u8) {

assert({

let sum2: int = x + y; // in ghost code, + returns int and does not overflow

0 <= sum2 < 511

});

}Since + does not overflow in ghost code, we can easily write specifications about overflow.

For example, to make sure that the executable x + y doesn’t overflow,

we simply write requires x + y < 256, relying on the fact that x + y is widened to type int

in the requires clause:

fn test_sum3(x: u8, y: u8)

requires

x + y < 256, // make sure "let sum1: u8 = x + y" can't overflow

{

let sum1: u8 = x + y; // succeeds

}Also note that the inputs need not have the same type; you can add, subtract, or multiply one integer type with another:

fn test_sum_mixed(x: u8, y: u16) {

assert(x + y >= y); // x + y has type int, so the assertion succeeds

assert(x - y <= x); // x - y has type int, so the assertion succeeds

}In general in ghost code,

Verus widens native Rust integer types to int for operators like +, -, and * that might overflow;

the reference page describes the widening rules in more detail.

Here are some more tips to keep in mind:

- In ghost code,

/and%compute Euclidean division and remainder, rather than Rust’s truncating division and remainder, when operating on negative left-hand sides or negative right-hand sides. - Division-by-0 and mod-by-0 are errors in executable code and are unspecified in ghost code (see Ghost code vs. exec code for more detail).

- The named arithmetic functions,

add(x, y),sub(x, y), andmul(x, y), do not perform widening, and thus have truncating behavior, even in ghost code. Verus also recognizes some Rust functions likewrapped_addandchecked_add, which may be used in either executable or ghost code.

Equality

Equality behaves differently in ghost code than in executable code.

In executable code, Rust defines == to mean a call to the eq function of the PartialEq trait:

fn equal1(x: u8, y: u8) {

let eq1 = x == y; // means x.eq(y) in Rust

let eq2 = y == x; // means y.eq(x) in Rust

assert(eq1 ==> eq2); // succeeds

}For built-in integer types like u8, the x.eq(y) function is defined as we’d expect,

returning true if x and y hold the same integers.

For user-defined types, though, eq could have other behaviors:

it might have side effects, behave nondeterministically,

or fail to fulfill its promise to implement an

equivalence relation,

even if the type implements the Rust Eq trait:

fn equal2<A: Eq>(x: A, y: A) {

let eq1 = x == y; // means x.eq(y) in Rust

let eq2 = y == x; // means y.eq(x) in Rust

assert(eq1 ==> eq2); // won't work; we can't be sure that A is an equivalence relation

}In ghost code, by contrast, the == operator is always an equivalence relation

(i.e. it is reflexive, symmetric, and transitive):

fn equal3(x: u8, y: u8) {

assert({

let eq1 = x == y;

let eq2 = y == x;

eq1 ==> eq2

});

}Verus defines == in ghost code to be true when:

- for two integers or booleans, the values are equal

- for two structs or enums, the types are the same and the fields are equal

- for two

&references, two Box values, two Rc values, or two Arc values, the pointed-to values are the same - for two RefCell values or two Cell values, the pointers to the interior data are equal (not the interior contents)

In addition, collection dataypes such as Seq<T>, Set<T>, and Map<Key, Value>

have their own definitions of ==,

where two sequences, two sets, or two maps are equal if their elements are equal.

As explained more in specification libraries and extensional equality,

these sometimes require the “extensional equality” operator =~= to help prove equality

between two sequences, two sets, or two maps.

Specification code, proof code, executable code

Verus classifies code into three modes: spec, proof, and exec,

where:

speccode describes properties about programsproofcode proves that programs satisfy propertiesexeccode is ordinary Rust code that can be compiled and run

Both spec code and proof code are forms of ghost code,

so we can organize the three modes in a hierarchy:

- code

- ghost code

speccodeproofcode

execcode

- ghost code

Every function in Verus is either a spec function, a proof function, or an exec function:

spec fn f1(x: int) -> int {

x / 2

}

proof fn f2(x: int) -> int {

x / 2

}

// "exec" is optional, and is usually omitted

exec fn f3(x: u64) -> u64 {

x / 2

}

exec is the default function annotation, so it is usually omitted:

fn f3(x: u64) -> u64 { x / 2 } // exec functionThe rest of this chapter will discuss these three modes in more detail. As you read, you can keep in mind the following relationships between the three modes:

| spec code | proof code | exec code | |

|---|---|---|---|

can contain spec code, call spec functions | yes | yes | yes |

can contain proof code, call proof functions | no | yes | yes |

can contain exec code, call exec functions | no | no | yes |

spec functions

Let’s start with a simple spec function that computes the minimum of two integers:

spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

fn test() {

assert(min(10, 20) == 10); // succeeds

assert(min(100, 200) == 100); // succeeds

}Unlike exec functions,

the bodies of spec functions are visible to other functions in the same module,

so the test function can see inside the min function,

which allows the assertions in test to succeed.

Across modules, the bodies of spec functions can be made public to other modules

or kept private to the current module.

The body is public if the function is marked open,

allowing assertions about the function’s body to succeed in other modules:

mod M1 {

use verus_builtin::*;

pub open spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

}

mod M2 {

use verus_builtin::*;

use crate::M1::*;

fn test() {

assert(min(10, 20) == 10); // succeeds

}

}By contrast, if the function is marked closed,

then other modules cannot see the function’s body,

even if they can see the function’s declaration. However,

functions within the same module can view a closed spec fn’s body.

In other words, pub makes the declaration public,

while open and closed make the body public or private.

All pub spec functions must be marked either open or closed;

Verus will complain if the function lacks this annotation.

mod M1 {

use verus_builtin::*;

pub closed spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

pub proof fn lemma_min(x: int, y: int)

ensures

min(x,y) <= x && min(x,y) <= y,

{}

}

mod M2 {

use verus_builtin::*;

use crate::M1::*;

fn test() {

assert(min(10, 20) == min(10, 20)); // succeeds

assert(min(10, 20) == 10); // FAILS

proof {

lemma_min(10,20);

}

assert(min(10, 20) <= 10); // succeeds

}

}In the example above with min being closed,

the module M2 can still talk about the function min,

proving, for example, that min(10, 20) equals itself

(because everything equals itself, regardless of what’s in it’s body).

On the other hand, the assertion that min(10, 20) == 10 fails,

because M2 cannot see min’s body and therefore doesn’t know that min

computes the minimum of two numbers:

error: assertion failed

|

| assert(min(10, 20) == 10); // FAILS

| ^^^^^^^^^^^^^^^^^ assertion failed

After the call to lemma_min, the assertion that min(10, 20) <= 10 succeeds because lemma_min exposes min(x,y) <= x as a post-condition. lemma_min can prove because this postcondition because it can see the body of min despite min being closed, as lemma_min and min are in the same module.

You can think of pub open spec functions as defining abbreviations

and pub closed spec functions as defining abstractions.

Both can be useful, depending on the situation.

spec functions may be called from other spec functions

and from specifications inside exec functions,

such as preconditions and postconditions.

For example, we can define the minimum of three numbers, min3,

in terms of the mininum of two numbers.

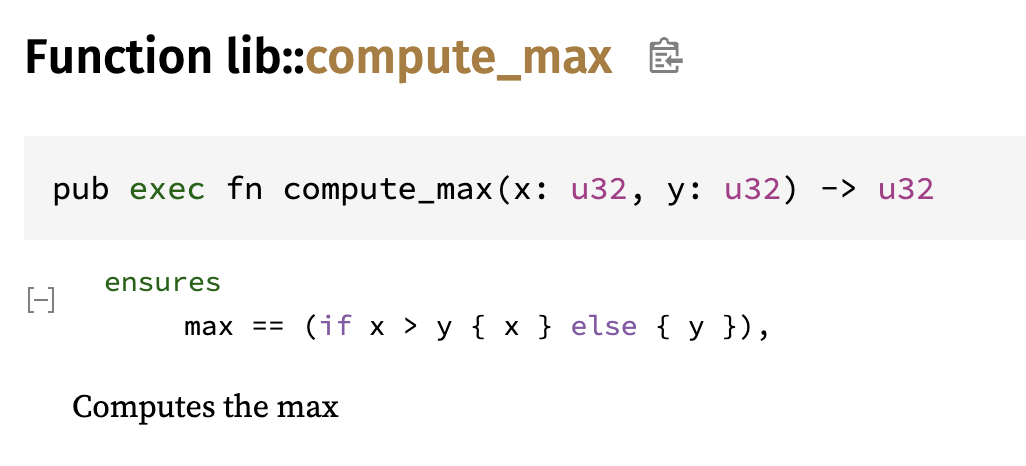

We can then define an exec function, compute_min3,

that uses imperative code with mutable updates to compute

the minimum of 3 numbers,

and defines its postcondition in terms of the spec function min3:

spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

spec fn min3(x: int, y: int, z: int) -> int {

min(x, min(y, z))

}

fn compute_min3(x: u64, y: u64, z: u64) -> (m: u64)

ensures

m == min3(x as int, y as int, z as int),

{

let mut m = x;

if y < m {

m = y;

}

if z < m {

m = z;

}

m

}

fn test() {

let m = compute_min3(10, 20, 30);

assert(m == 10);

}

The difference between min3 and compute_min3 highlights some differences

between spec code and exec code.

While exec code may use imperative language features like mutation,

spec code is restricted to purely functional mathematical code.

On the other hand, spec code is allowed to use int and nat,

while exec code is restricted to compilable types like u64.

proof functions

Consider the pub closed spec min function from the previous section.

This defined an abstract min function without revealing the internal

definition of min to other modules.

However, an abstract function definition is useless unless we can say something about the function.

For this, we can use a proof function.

In general, proof functions will reveal or prove properties about specifications.

In this example, we’ll define a proof function named lemma_min that

reveals properties about min without revealing the exact definition of min.

Specifically, lemma_min reveals that min(x, y) equals either x or y and

is no larger than x and y:

mod M1 {

use verus_builtin::*;

pub closed spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

pub proof fn lemma_min(x: int, y: int)

ensures

min(x, y) <= x,

min(x, y) <= y,

min(x, y) == x || min(x, y) == y,

{

}

}

mod M2 {

use verus_builtin::*;

use crate::M1::*;

proof fn test() {

lemma_min(10, 20);

assert(min(10, 20) == 10); // succeeds

assert(min(100, 200) == 100); // FAILS

}

}Like exec functions, proof functions may have requires and ensures clauses.

Unlike exec functions, proof functions are ghost and are not compiled to executable code.

In the example above, the lemma_min(10, 20) function is used to help the function test in module M2

prove an assertion about min(10, 20), even when M2 cannot see the internal definition of min

because min is closed.

On the other hand, the assertion about min(100, 200) still fails

unless test also calls lemma_min(100, 200).

proof blocks

Ultimately, the purpose of spec functions and proof functions is to help prove

properties about executable code in exec functions.

In fact, exec functions can contain pieces of proof code in proof blocks,

written with proof { ... }.

Just like a proof function contains proof code,

a proof block in an exec function contains proof code

and can use all of the ghost code features that proof functions can use,

such as the int and nat types.

Consider an earlier example that introduced variables inside an assertion:

fn test_consts_infer() {

let u: u8 = 1;

assert({

let i: int = 2;

let n: nat = 3;

0 <= u < i < n < 4

});

}We can write this in a more natural style using a proof block:

fn test_consts_infer() {

let u: u8 = 1;

proof {

let i: int = 2;

let n: nat = 3;

assert(0 <= u < i < n < 4);

}

}

Here, the proof code inside the proof block can create local variables

of type int and nat,

which can then be used in a subsequent assertion.

The entire proof block is ghost code, so all of it, including its local variables,

will be erased before compilation to executable code.

Proof blocks can call proof functions.

In fact, any calls from an exec function to a proof function

must appear inside proof code such as a proof block,

rather than being called directly from the exec function’s exec code.

This helps clarify which code is executable and which code is ghost,

both for the compiler and for programmers reading the code.

In the exec function test shown below,

a proof block is used to call lemma_min,

allowing subsequent assertions about min to succeed.

mod M1 {

use verus_builtin::*;

pub closed spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

pub proof fn lemma_min(x: int, y: int)

ensures

min(x, y) <= x,

min(x, y) <= y,

min(x, y) == x || min(x, y) == y,

{

}

}

mod M2 {

use verus_builtin::*;

use crate::M1::*;

fn test() {

proof {

lemma_min(10, 20);

lemma_min(100, 200);

}

assert(min(10, 20) == 10); // succeeds

assert(min(100, 200) == 100); // succeeds

}

}

assert-by

Notice that in the previous example,

the information that test gains about min

is not confined to the proof block,

but instead propagates past the end of the proof block

to help prove the subsequent assertions.

This is often useful,

particularly when the proof block helps

prove preconditions to subsequent calls to exec functions,

which must appear outside the proof block.

However, sometimes we only need to prove information for a specific purpose,

and it clarifies the structure of the code if we limit the scope

of the information gained.

For this reason,

Verus supports assert(...) by { ... } expressions,

which allows proof code inside the by { ... } block whose sole purpose

is to prove the asserted expression in the assert(...).

Any additional information gained in the proof code is limited to the scope of the block

and does not propagate outside the assert(...) by { ... } expression.

In the example below,

the proof code in the block calls both lemma_min(10, 20) and lemma_min(100, 200).

The first call is used to prove min(10, 20) == 10 in the assert(...) by { ... } expression.

Once this is proven, the subsequent assertion assert(min(10, 20) == 10); succeeds.

However, the assertion assert(min(100, 200) == 100); fails,

because the information gained by the lemma_min(100, 200) call

does not propagate outside the block that contains the call.

mod M1 {

use verus_builtin::*;

pub closed spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}

pub proof fn lemma_min(x: int, y: int)

ensures

min(x, y) <= x,

min(x, y) <= y,

min(x, y) == x || min(x, y) == y,

{

}

}

mod M2 {

use verus_builtin::*;

use crate::M1::*;

fn test() {

assert(min(10, 20) == 10) by {

lemma_min(10, 20);

lemma_min(100, 200);

}

assert(min(10, 20) == 10); // succeeds

assert(min(100, 200) == 100); // FAILS

}

}spec functions vs. proof functions

Now that we’ve seen both spec functions and proof functions,

let’s take a longer look at the differences between them.

We can summarize the differences in the following table

(including exec functions in the table for reference):

| spec function | proof function | exec function | |

|---|---|---|---|

| compiled or ghost | ghost | ghost | compiled |

| code style | purely functional | mutation allowed | mutation allowed |

can call spec functions | yes | yes | yes |

can call proof functions | no | yes | yes |

can call exec functions | no | no | yes |

| body visibility | may be visible | never visible | never visible |

| body | body optional | body mandatory | body mandatory |

| determinism | deterministic | nondeterministic | nondeterministic |

| preconditions/postconditions | recommends | requires/ensures | requires/ensures |

As described in the spec functions section,

spec functions make their bodies visible to other functions in their module

and may optionally make their bodies visible to other modules as well.

spec functions can also omit their bodies entirely:

spec fn f(i: int) -> int;

Such an uninterpreted function can be useful in libraries that define an abstract, uninterpreted function along with trusted axioms about the function.

Determinism

spec functions are deterministic:

given the same arguments, they always return the same result.

Code can take advantage of this determinism even when a function’s body

is not visible.

For example, the assertion x1 == x2 succeeds in the code below,

because both x1 and x2 equal s(10),

and s(10) always produces the same result, because s is a spec function:

mod M1 {

use verus_builtin::*;

pub closed spec fn s(i: int) -> int {

i + 1

}

pub proof fn p(i: int) -> int {

i + 1

}

}

mod M2 {

use verus_builtin::*;

use crate::M1::*;

proof fn test_determinism() {

let s1 = s(10);

let s2 = s(10);

assert(s1 == s2); // succeeds

let p1 = p(10);

let p2 = p(10);

assert(p1 == p2); // FAILS

}

}By contrast, the proof function p is, in principle,

allowed to return different results each time it is called,

so the assertion p1 == p2 fails.

(Nondeterminism is common for exec functions

that perform input-output operations or work with random numbers.

In practice, it would be unusual for a proof function to behave nondeterministically,

but it is allowed.)

recommends

exec functions and proof functions can have requires and ensures clauses.

By contrast, spec functions cannot have requires and ensures clauses.

This is similar to the way Boogie works,

but differs from other systems like Dafny

and F*.

The reason for disallowing requires and ensures is to keep Verus’s specification language

close to the SMT solver’s mathematical language in order to use the SMT solver as efficiently

as possible (see the Verus Overview).

Nevertheless, it’s sometimes useful to have some sort of preconditions on spec functions

to help catch mistakes in specifications early or to catch accidental misuses of spec functions.

Therefore, spec functions may contain recommends clauses

that are similar to requires clauses,

but represent just lightweight recommendations rather than hard requirements.

For example, for the following function,

callers are under no obligation to obey the i > 0 recommendation:

spec fn f(i: nat) -> nat

recommends

i > 0,

{

(i - 1) as nat

}

proof fn test1() {

assert(f(0) == f(0)); // succeeds

}

It’s perfectly legal for test1 to call f(0), and no error or warning will be generated for f

(in fact, Verus will not check the recommendation at all).

However, if there’s a verification error in a function,

Verus will automatically rerun the verification with recommendation checking turned on,

in hopes that any recommendation failures will help diagnose the verification failure.

For example, in the following:

proof fn test2() {

assert(f(0) <= f(1)); // FAILS

}Verus print the failed assertion as an error and then prints the failed recommendation as a note:

error: assertion failed

|

| assert(f(0) <= f(1)); // FAILS

| ^^^^^^^^^^^^ assertion failed

note: recommendation not met

|

| recommends i > 0

| ----- recommendation not met

...

| assert(f(0) <= f(1)); // FAILS

| ^^^^^^^^^^^^

If the note isn’t helpful, programmers are free to ignore it.

By default, Verus does not perform recommends checking on calls from spec functions:

spec fn caller1() -> nat {

f(0) // no note, warning, or error generated

}

However, you can write spec(checked) to request recommends checking,

which will cause Verus to generate warnings for recommends violations:

spec(checked) fn caller2() -> nat {

f(0) // generates a warning because of "(checked)"

}

This is particularly useful for specifications that are part of the “trusted computing base” that describes the interface to external, unverified components.

Ghost code vs. exec code

The purpose of exec code is to manipulate physically real values —

values that exist in physical electronic circuits when a program runs.

The purpose of ghost code, on the other hand,

is merely to talk about the values that exec code manipulates.

In a sense, this gives ghost code supernatural abilities:

ghost code can talk about things that could not be physically implemented at run-time.

We’ve already seen one example of this with the types int and nat,

which can only be used in ghost code.

As another example, ghost code can talk about the result of division by zero:

fn divide_by_zero() {

let x: u8 = 1;

assert(x / 0 == x / 0); // succeeds in ghost code

let y = x / 0; // FAILS in exec code

}This simply reflects the SMT solver’s willingness to reason about the result of division by zero

as an unspecified integer value.

By contrast, Verus reports a verification failure if exec code attempts to divide by zero:

error: possible division by zero

|

| let y = x / 0; // FAILS

| ^^^^^

Two particular abilities of ghost code1 are worth keeping in mind:

- Ghost code can copy values of any type,

even if the type doesn’t implement the Rust

Copytrait. - Ghost code can create a value of any type2, even if the type has no public constructors (e.g. even if the type is struct whose fields are all private to another module).

For example, the following spec functions create and duplicate values of type S,

defined in another module with private fields and without the Copy trait:

mod MA {

// does not implement Copy

// does not allow construction by other modules

pub struct S {

private_field: u8,

}

}

mod MB {

use verus_builtin::*;

use crate::MA::*;

// construct a ghost S

spec fn make_S() -> S;

// duplicate an S

spec fn duplicate_S(s: S) -> (S, S) {

(s, s)

}

}

These operations are not allowed in exec code.

Furthermore, values from ghost code are not allowed to leak into exec code —

what happens in ghost code stays in ghost code.

Any attempt to use a value from ghost code in exec code will result in a compile-time error:

fn test(s: S) {

let pair = duplicate_S(s); // FAILS

}error: cannot call function with mode spec

|

| let pair = duplicate_S(s); // FAILS

| ^^^^^^^^^^^^^^

As an example of ghost code that uses these abilities,

a call to the Verus Seq::index(...) function

can duplicate a value from the sequence, if the index i is within bounds,

and create a value out of thin air if i is out of bounds:

impl<A> Seq<A> {

...

/// Gets the value at the given index `i`.

///

/// If `i` is not in the range `[0, self.len())`, then the resulting value

/// is meaningless and arbitrary.

pub spec fn index(self, i: int) -> A

recommends 0 <= i < self.len();

...

}Producing exec code from spec code (and vice versa)

Thanks to various contributions from the Verus community, Verus can, in some cases, automatically produce an exec function that provably implements a spec function. Conversely, in some cases, it can automatically produce a spec function from an exec function.

-

Variables in

proofcode can opt out of these special abilities using thetrackedannotation, but this is an advanced feature that can be ignored for now. ↩ -

This is true even if the type has no values in

execcode, like the Rust!“never” type (see the “bottom” value in this technical discussion). ↩

const declarations

In Verus, const declarations are treated internally as 0-argument function calls.

Thus just like functions, const declarations can be marked spec, proof, exec,

or left without an explicit mode.

By default, a const without an explicit mode is assigned a dual spec/exec mode.

We’ll go through what each of these modes mean.

spec consts

A spec const is like a spec function with no arguments.

It is always ghost and cannot be used as an exec value.

spec const SPEC_ONE: int = 1;

spec fn spec_add_one(x: int) -> int {

x + SPEC_ONE

}

proof and exec consts

Just as proof and exec functions can have ensures clauses specifying a postcondition,

proof and exec consts can have ensures clauses to tie the declaration to a spec expression.

The syntax follows the syntax of a function definition.

exec const C: u64

ensures

C == 7,

{

7

}

Note here that we can also use assert when defining the const,

and that we can define it using a call to another const function.

spec fn f() -> int {

1

}

const fn e() -> (u: u64)

ensures

u == f(),

{

1

}

exec const E: u64

ensures

E == 2,

{

assert(f() == 1);

1 + e()

}

spec/exec consts

A const without an explicit mode is dual-use:

it is usable as both an exec value and a spec value.

const ONE: u8 = 1;

fn add_one(x: u8) -> (ret: u8)

requires

x < 0xff,

ensures

ret == x + ONE, // use "ONE" in spec code

{

x + ONE // use "ONE" in exec code

}

Therefore, the const definition is restricted to obey the rules

for both exec code and spec code.

For example, as with exec code, its type must be compilable (e.g. u8, not int),

and, as with spec code, it cannot call any exec or proof functions.

const fn foo() -> u64 { 1 }

const C: u64 = foo(); // FAILS with error "cannot call function `foo` with mode exec"Using an exec const in a spec or proof context

Similar to functions, if you want to use an exec const in a spec or proof context,

you can annotate the declaration with [verifier::when_used_as_spec(SPEC_DEF)],

where SPEC_DEF is the name of a spec const or a spec function with no arguments.

use vstd::layout;

global layout usize is size == 8;

spec const SPEC_USIZE_BYTES: usize = layout::size_of_as_usize::<usize>();

#[verifier::when_used_as_spec(SPEC_USIZE_BYTES)]

exec const USIZE_BYTES: usize

ensures

USIZE_BYTES as nat == layout::size_of::<usize>(),

{

8

}

In this example, without the annotation, Verus will give the error “cannot read const with mode exec.”

Moreover, attempting to use the annotation

#[verifier::when_used_as_spec(layout::size_of::<usize>)],

without defining SPEC_USIZE_BYTES separately,

will also result in an error:

when_used_as_spec can only handle the case when two functions or consts have the same signature.

It doesn’t handle using something like ::<usize> to coerce a function to a different signature.

Trouble-shooting overflow errors

Verus may have difficulty proving that a const declaration does not overflow;

using [verifier::when_used_as_spec(SPEC_DEF)]

or [verifier::non_linear] may help.

For example, here [verifier::non_linear] is added to prevent the error

“possible arithmetic underflow/overflow.”

This allows Verus to reason about the (seemingly)

non-linear expression BAR_PLUS_ONE * BAR,

instead of giving up immediately.

See the chapter on non-linear reasoning for more details.

pub const FOO: u8 = 4;

pub const BAR: u8 = FOO;

pub const BAR_PLUS_ONE: u8 = BAR + 1;

#[verifier::nonlinear]

pub const G: u8 = BAR_PLUS_ONE * BAR;

Putting It All Together

To show how spec, proof, and exec code work together, consider the example

below of computing the n-th triangular number.

We’ll cover this example and the features it uses in more detail in Chapter 4,

so for now, let’s focus on the high-level structure of the code.

We use a spec function triangle to mathematically define our specification using natural numbers (nat)

and a recursive description. We then write a more efficient iterative implementation

as the exec function loop_triangle (recall that exec is the default mode for functions).

We connect the correctness of loop_triangle’s return value to our mathematical specification

in loop_triangle’s ensures clause.

However, to successfully verify loop_triangle, we need a few more things. First, in executable

code, we have to worry about the possibility of arithmetic overflow. To keep things simple here,

we add a precondition to loop_triangle saying that the result needs to be less than one million,

which means it will certainly fit into a u32.

We also need to translate the knowledge that the final triangle result fits in a u32

into the knowledge that each individual step of computing the result won’t overflow,

i.e., that computing sum = sum + idx won’t overflow. We can do this by showing

that triangle is monotonic; i.e., if you increase the argument to triangle, the result increases too.

Showing this property requires an inductive proof. We cover inductive proofs

later; the important thing here is that we can do this proof using a proof function

(triangle_is_monotonic). To invoke the results of our proof in our exec implementation,

we assert that the new sum fits, and as

justification, we an invoke our proof with the relevant arguments. At the

call site, Verus will check that the preconditions for triangle_is_monotonic

hold and then assume that the postconditions hold.

Finally, our implementation uses a while loop, which means it requires some loop invariants, which we cover later.

spec fn triangle(n: nat) -> nat

decreases n,

{

if n == 0 {

0

} else {

n + triangle((n - 1) as nat)

}

}

proof fn triangle_is_monotonic(i: nat, j: nat)

ensures

i <= j ==> triangle(i) <= triangle(j),

decreases j,

{

// We prove the statement `i <= j ==> triangle(i) <= triangle(j)`

// by induction on `j`.

if j == 0 {

// The base case (`j == 0`) is trivial since it's only

// necessary to reason about when `i` and `j` are both 0.

// So no proof lines are needed for this case.

}

else {

// In the induction step, we can assume the statement is true

// for `j - 1`. In Verus, we can get that fact into scope with

// a recursive call substituting `j - 1` for `j`.

triangle_is_monotonic(i, (j - 1) as nat);

// Once we know it's true for `j - 1`, the rest of the proof

// is trivial.

}

}

fn loop_triangle(n: u32) -> (sum: u32)

requires

triangle(n as nat) < 0x1_0000_0000,

ensures

sum == triangle(n as nat),

{

let mut sum: u32 = 0;

let mut idx: u32 = 0;

while idx < n

invariant

idx <= n,

sum == triangle(idx as nat),

triangle(n as nat) < 0x1_0000_0000,

decreases n - idx,

{

idx = idx + 1;

assert(sum + idx < 0x1_0000_0000) by {

triangle_is_monotonic(idx as nat, n as nat);

}

sum = sum + idx;

}

sum

}Recursion and loops

Suppose we want to compute the nth triangular number:

triangle(n) = 0 + 1 + 2 + ... + (n - 1) + n

We can express this as a simple recursive funciton:

spec fn triangle(n: nat) -> nat

decreases n,

{

if n == 0 {

0

} else {

n + triangle((n - 1) as nat)

}

}

This chapter discusses how to define and use recursive functions,

including writing decreases clauses and using fuel.

It then explores a series of verified implementations of triangle,

starting with a basic recursive implementation and ending with a while loop.

Recursive functions, decreases, fuel

Recursive functions are functions that call themselves.

In order to ensure soundness, a recursive spec function must terminate on all inputs —

infinite recursive calls aren’t allowed.

To see why termination is important, consider the following nonterminating function definition:

spec fn bogus(i: int) -> int {

bogus(i) + 1 // FAILS, error due to nontermination

}Verus rejects this definition because the recursive call loops infinitely, never terminating.

If Verus accepted the definion, then you could very easily prove false,

because, for example, the definition insists that bogus(3) == bogus(3) + 1,

which implies that 0 == 1, which is false:

proof fn exploit_bogus()

ensures

false,

{

assert(bogus(3) == bogus(3) + 1);

}To help prove termination,

Verus requires that each recursive spec function definition contain a decreases clause:

spec fn triangle(n: nat) -> nat

decreases n,

{

if n == 0 {

0

} else {

n + triangle((n - 1) as nat)

}

}

Each recursive call must decrease the expression in the decreases clause by at least 1.

Furthermore, the call cannot cause the expression to decrease below 0.

With these restrictions, the expression in the decreases clause serves as an upper bound on the

depth of calls that triangle can make to itself, ensuring termination.

While Verus can often complete these proofs of termination automatically, it sometimes needs additional help with the proof.

Fuel and reasoning about recursive functions

Given the definition of triangle above, we can make some assertions about it:

fn test_triangle_fail() {

assert(triangle(0) == 0); // succeeds

assert(triangle(10) == 55); // FAILS

}The first assertion, about triangle(0), succeeds.

But somewhat surprisingly, the assertion assert(triangle(10) == 55) fails,

despite the fact that triangle(10) really is

equal to 55.

We’ve just encountered a limitation of automated reasoning:

SMT solvers cannot automatically prove all true facts about all recursive functions.

For nonrecursive functions,

an SMT solver can reason about the functions simply by inlining them.

For example, if we have a call min(a + 1, 5) to the min function:

spec fn min(x: int, y: int) -> int {

if x <= y {

x

} else {

y

}

}the SMT solver can replace min(a + 1, 5) with:

if a + 1 <= 5 {

a + 1

} else {

5

}

which eliminates the call. However, this strategy doesn’t completely work with recursive functions, because inlining the function produces another expression with a call to the same function:

triangle(x) = if x == 0 { 0 } else { x + triangle(x - 1) }

Naively, the solver could keep inlining again and again, producing more and more expressions, and this strategy would never terminate:

triangle(x) = if x == 0 { 0 } else { x + triangle(x - 1) }

triangle(x) = if x == 0 { 0 } else { x + (if x - 1 == 0 { 0 } else { x - 1 + triangle(x - 2) }) }

triangle(x) = if x == 0 { 0 } else { x + (if x - 1 == 0 { 0 } else { x - 1 + (if x - 2 == 0 { 0 } else { x - 2 + triangle(x - 3) }) }) }

To avoid this infinite inlining,

Verus limits the number of recursive calls that any given call can spawn in the SMT solver.

This limit is called the fuel;

each nested recursive inlining consumes one unit of fuel.

By default, the fuel is 1, which is just enough for assert(triangle(0) == 0) to succeed

but not enough for assert(triangle(10) == 55) to succeed.

To increase the fuel to a larger amount,

we can use the reveal_with_fuel directive:

fn test_triangle_reveal() {

proof {

reveal_with_fuel(triangle, 11);

}

assert(triangle(10) == 55);

}Here, 11 units of fuel is enough to inline the 11 calls

triangle(0), …, triangle(10).

Note that even if we only wanted to supply 1 unit of fuel,

we could still prove assert(triangle(10) == 55) through a long series of assertions:

fn test_triangle_step_by_step() {

assert(triangle(0) == 0);

assert(triangle(1) == 1);

assert(triangle(2) == 3);

assert(triangle(3) == 6);

assert(triangle(4) == 10);

assert(triangle(5) == 15);

assert(triangle(6) == 21);

assert(triangle(7) == 28);

assert(triangle(8) == 36);

assert(triangle(9) == 45);

assert(triangle(10) == 55); // succeeds

}This works because 1 unit of fuel is enough to prove assert(triangle(0) == 0),

and then once we know that triangle(0) == 0,

we only need to inline triangle(1) once to get:

triangle(1) = if 1 == 0 { 0 } else { 1 + triangle(0) }

Now the SMT solver can use the previously computed triangle(0) to simplify this to:

triangle(1) = if 1 == 0 { 0 } else { 1 + 0 }

and then produce triangle(1) == 1.

Likewise, the SMT solver can then use 1 unit of fuel to rewrite triangle(2)

in terms of triangle(1), proving triangle(2) == 3, and so on.

However, it’s probably best to avoid long series of assertions if you can,

and instead write a proof that makes it clear why the SMT proof fails by default

(not enough fuel) and fixes exactly that problem:

fn test_triangle_assert_by() {

assert(triangle(10) == 55) by {

reveal_with_fuel(triangle, 11);

}

}Recursive exec and proof functions, proofs by induction

The previous section introduced a specification for triangle numbers. Given that, let’s try a series of executable implementations of triangle numbers, starting with a simple recursive implementation:

fn rec_triangle(n: u32) -> (sum: u32)

ensures

sum == triangle(n as nat),

{

if n == 0 {

0

} else {

n + rec_triangle(n - 1) // FAILS: possible overflow

}

}We immediately run into one small practical difficulty: the implementation needs to use a finite-width integer to hold the result, and this integer may overflow:

error: possible arithmetic underflow/overflow

|

| n + rec_triangle(n - 1) // FAILS: possible overflow

| ^^^^^^^^^^^^^^^^^^^^^^^

Indeed, we can’t expect the implementation to work if the result

won’t fit in the finite-width integer type,

so it makes sense to add a precondition saying

that the result must fit,

which for a u32 result means triangle(n) < 0x1_0000_0000:

fn rec_triangle(n: u32) -> (sum: u32)

requires

triangle(n as nat) < 0x1_0000_0000,

ensures

sum == triangle(n as nat),

decreases n,

{

if n == 0 {

0

} else {

n + rec_triangle(n - 1)

}

}This time, verification succeeds.

It’s worth pausing for a few minutes, though, to understand why the verification succeeds.

For example, an execution of rec_triangle(10)

performs 10 separate additions, each of which could potentially overflow.

How does Verus know that none of these 10 additions will overflow,

given just the initial precondition triangle(10) < 0x1_0000_0000?

The answer is that each instance of triangle(n) for n != 0

makes a recursive call to triangle(n - 1),

and this recursive call must satisfy the precondition triangle(n - 1) < 0x1_0000_0000.

Let’s look at how this is proved.

If we know triangle(n) < 0x1_0000_0000 from rec_triangle’s precondition

and we use 1 unit of fuel to inline the definition of triangle once,

we get:

triangle(n) < 0x1_0000_0000

triangle(n) = if n == 0 { 0 } else { n + triangle(n - 1) }

In the case where n != 0, this simplifies to:

triangle(n) < 0x1_0000_0000

triangle(n) = n + triangle(n - 1)

From this, we conclude n + triangle(n - 1) < 0x1_0000_0000,

which means that triangle(n - 1) < 0x1_0000_0000,

since 0 <= n, since n has type u32, which is nonnegative.

Intuitively, you can imagine that as rec_triangle executes,

proofs about triangle(n) < 0x1_0000_0000 gets passed down the stack to the recursive calls,

proving triangle(10) < 0x1_0000_0000 in the first call,

then triangle(9) < 0x1_0000_0000 in the second call,

triangle(8) < 0x1_0000_0000 in the third call,

and so on.

(Of course, the proofs don’t actually exist at run-time —

they are purely static and are erased before compilation —

but this is still a reasonable way to think about it.)

Towards an imperative implementation: mutation and tail recursion

The recursive implementation presented above is easy to write and verify,

but it’s not very efficient, since it requires a lot of stack space for the recursion.

Let’s take a couple small steps towards a more efficient, imperative implementation

based on while loops.

First, to prepare for the mutable variables that we’ll use in while loops,

let’s switch sum from being a return value to being a mutably updated variable:

fn mut_triangle(n: u32, sum: &mut u32)

requires

triangle(n as nat) < 0x1_0000_0000,

ensures

*sum == triangle(n as nat),

decreases n,

{

if n == 0 {

*sum = 0;

} else {

mut_triangle(n - 1, sum);

*sum = *sum + n;

}

}From the verification’s point of view, this doesn’t change anything significant.

Internally, when performing verification,

Verus simply represents the final value of *sum as a return value,

making the verification of mut_triangle essentially the same as

the verification of rec_triangle.

Next, let’s try to eliminate the excessive stack usage by making the function

tail recursive.

We do this by introducing an index variable idx that counts up from 0 to n,

just as a while loop would do:

fn tail_triangle(n: u32, idx: u32, sum: &mut u32)

requires

idx <= n,

*old(sum) == triangle(idx as nat),

triangle(n as nat) < 0x1_0000_0000,

ensures

*sum == triangle(n as nat),

{

if idx < n {

let idx = idx + 1;

*sum = *sum + idx;

tail_triangle(n, idx, sum);

}

}In the preconditions and postconditions,

the expression *old(sum) refers to the initial value of *sum,

at the entry to the function,

while *sum refers to the final value, at the exit from the function.

The precondition *old(sum) == triangle(idx as nat) specifies that

as tail_triangle executes more and more recursive calls,

sum accumulates the sum 0 + 1 + ... + idx.

Each recursive call increases idx by 1 until idx reaches n,

at which point sum equals 0 + 1 + ... + n and the function simply returns sum unmodified.

When we try to verify tail_triangle, though, Verus reports an error about possible overflow:

error: possible arithmetic underflow/overflow

|

| *sum = *sum + idx;

| ^^^^^^^^^^

This may seem perplexing at first:

why doesn’t the precondition triangle(n as nat) < 0x1_0000_0000

automatically take care of the overflow,

as it did for rec_triangle and mut_triangle?

The problem is that we’ve reversed the order of the addition and the recursive call.

rec_triangle and mut_triangle made the recursive call first,

and then performed the addition.

This allowed them to prove all the necessary

facts about overflow first in the series of recursive calls

(e.g. proving triangle(10) < 0x1_0000_0000, triangle(9) < 0x1_0000_0000,

…, triangle(0) < 0x1_0000_0000)

before doing the arithmetic that depends on these facts.

But tail_triangle tries to perform the arithmetic first,

before the recursion,

so it never has a chance to develop these facts from the original

triangle(n) < 0x1_0000_0000 assumption.

Proofs by induction

In the example of computing triangle(10),

we need to know triangle(0) < 0x1_0000_0000,

then triangle(1) < 0x1_0000_0000,

and so on, but we only know triangle(10) < 0x1_0000_0000 to start with.

If we somehow knew that

triangle(0) <= triangle(10),

triangle(1) <= triangle(10),

and so on,

then we could derive what we want from triangle(10) < 0x1_0000_0000.

What we need is a lemma that proves that if i <= j,

then triangle(i) <= triangle(j).

In other words, we need to prove that triangle is monotonic.

We can use a proof function to implement this lemma:

proof fn triangle_is_monotonic(i: nat, j: nat)

ensures

i <= j ==> triangle(i) <= triangle(j),

decreases j,

{

// We prove the statement `i <= j ==> triangle(i) <= triangle(j)`

// by induction on `j`.

if j == 0 {

// The base case (`j == 0`) is trivial since it's only

// necessary to reason about when `i` and `j` are both 0.

// So no proof lines are needed for this case.

}

else {

// In the induction step, we can assume the statement is true

// for `j - 1`. In Verus, we can get that fact into scope with

// a recursive call substituting `j - 1` for `j`.

triangle_is_monotonic(i, (j - 1) as nat);

// Once we know it's true for `j - 1`, the rest of the proof

// is trivial.

}

}

The proof is by induction on j,

where the base case of the induction is i == j

and the induction step relates j - 1 to j.

In Verus, the induction step is implemented as a recursive call from the proof to itself

(in this example, this recursive call is line triangle_is_monotonic(i, (j - 1) as nat)).

As with recursive spec functions,

recursive proof functions must terminate and need a decreases clause.

Otherwise, it would be easy to prove false,

as in the following non-terminating “proof”:

proof fn circular_reasoning()

ensures

false,

{

circular_reasoning(); // FAILS, does not terminate